Automated Web Scraping & AI Data Extraction

Client Background

The client needed a streamlined solution to collect and process part number data from multiple websites. Their existing method relied on manual data entry, which was slow, prone to errors, and increasingly difficult to manage as the volume of information grew. Extracting, analyzing, and organizing this data required significant time and effort, limiting their ability to make timely decisions. To address these challenges, they needed automated web scraping tools capable of handling these tasks while ensuring accuracy and adaptability.

Challenges & Goals

The following were some of the challenges and goals of the project:

Challenges

Collecting part number data manually was time-consuming and required a considerable amount of effort. This method not only slowed down the process but also led to inconsistencies, making it difficult to maintain accuracy.

Many websites posed additional challenges, such as requiring logins, incorporating captchas, and using dynamically loaded content, all of which complicated data extraction. These barriers made it difficult to gather information efficiently and required constant manual adjustments.

Even when data was successfully retrieved, it often lacked a structured format. This made it challenging to compare and analyze, further slowing down decision-making processes. As the need for data grew, the limitations of manual collection became even more apparent, highlighting the necessity for a more effective and scalable approach.

Goals

The first goal of the project was to create a system for web scraping using Selenium, SerpApi, and Python to collect part number data from multiple websites. By automating this process, the aim was to reduce reliance on manual entry and improve the reliability of data collection.

Another key objective was to apply AI-based processing to analyze and organize the extracted data. The system needed to identify alternate and equivalent part numbers, allowing for a more comprehensive understanding of available components and their relationships.

Ensuring that data retrieval remained accurate and consistent despite website restrictions was also a priority. A central question was how to get past CAPTCHAs during scraping, and the solution also had to navigate logins and dynamically loaded content without disrupting the flow of information.

Finally, the extracted data needed to be presented in structured formats, such as CSV and Google Sheets. This would allow for seamless integration into the client’s existing workflows, making the information easily accessible and actionable.

Conclusion

This project improved how the client collects and processes data, replacing manual methods with an automated system that organizes and structures information effectively. By combining web scraping with AI, Relu Consultancy provided a reliable solution tailored to the client’s needs. The result was a more accessible, accurate, and manageable data collection process, allowing for better decision-making and reduced workload.

Implementation & Results

A custom web scraping workflow was built using SerpApi, Selenium, and Python. The system was designed to handle various website structures, extract part numbers accurately, and minimize errors. With this approach, data retrieval became faster and required less manual input.
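To illustrate the extraction step, here is a minimal sketch of pulling candidate part numbers out of page text once Selenium or SerpApi has returned it. The token pattern and the length limits are hypothetical assumptions (runs of letters and digits, optionally joined by dashes, containing at least one digit); real part-number formats vary by manufacturer and would need their own rules.

```python
import re

# Hypothetical token pattern: runs of letters/digits, optionally joined by
# dashes (e.g. "LM317T", "SN74-HC595"). Real formats vary by manufacturer.
TOKEN_RE = re.compile(r"\b[A-Z0-9]+(?:-[A-Z0-9]+)*\b")


def extract_part_numbers(page_text: str, min_len: int = 5, max_len: int = 20) -> list:
    """Return unique candidate part numbers in order of first appearance.

    A token counts as a candidate only if it contains at least one digit
    and its length falls within [min_len, max_len].
    """
    seen = set()
    candidates = []
    for match in TOKEN_RE.finditer(page_text.upper()):
        token = match.group(0)
        has_digit = any(ch.isdigit() for ch in token)
        if has_digit and min_len <= len(token) <= max_len and token not in seen:
            seen.add(token)
            candidates.append(token)
    return candidates
```

For example, `extract_part_numbers("Replaces lm317t and SN74-HC595; see page 12.")` keeps the two part-like tokens and drops plain words and the short page number. Candidates found this way would still need the validation and AI structuring described below.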

AI-Powered Data Structuring

Once the data was collected, Google's Gemini 1.5 Pro model processed and structured the information. This AI-powered extraction step:

- Identified alternate and equivalent part numbers, ensuring a broader scope of data.

- Formatted the extracted information into structured files for better usability.

- Generated reports in CSV and Google Sheets, making data more accessible for analysis.
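The case study does not describe how the model's output was post-processed. As one hedged possibility, if the model returns pairs of part numbers it judges equivalent, those pairs can be merged into groups with a union-find structure and written out as CSV, which Google Sheets can import directly. The pair format and every function name here are hypothetical.

```python
import csv
import io


def normalize(part: str) -> str:
    """Canonical form for comparison: uppercase, no spaces or dashes."""
    return part.upper().replace("-", "").replace(" ", "")


def group_equivalents(pairs: list) -> list:
    """Merge pairwise equivalences (a, b) into sorted groups using union-find."""
    parent = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for a, b in pairs:
        root_a, root_b = find(normalize(a)), find(normalize(b))
        if root_a != root_b:
            parent[root_b] = root_a

    groups = {}
    for part in list(parent):
        groups.setdefault(find(part), []).append(part)
    return sorted(sorted(group) for group in groups.values())


def groups_to_csv(groups: list) -> str:
    """One row per part: the part, its group's primary part, and its equivalents."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["part_number", "primary_part", "equivalents"])
    for group in groups:
        primary = group[0]
        for part in group:
            others = [p for p in group if p != part]
            writer.writerow([part, primary, ";".join(others)])
    return buffer.getvalue()
```

Union-find makes equivalence transitive: if A matches B and B matches C, all three land in one group even though the model never paired A with C. Normalizing first means formatting variants such as "LM317-T" and "LM317T" collapse into one entry.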

Reliable System for Long-Term Use

To maintain accuracy and consistency, the system was built to:

- Adjust to changing website structures, reducing the need for constant manual updates.

- Bypass obstacles like logins, captchas, and dynamic content without compromising reliability.

- Require minimal manual intervention while being adaptable to increasing data demands.

Business Impact

By implementing this system, the client saw significant improvements in their workflow:

- Reduced manual data collection, lowering errors and saving valuable time.

- Faster data retrieval, enabling quicker responses to business needs.

- Structured insights made data easier to analyze, improving decision-making.

- A system built to handle growing data needs, ensuring continued usability.

Key Insights

- Reducing manual processes saves time and minimizes errors.

- AI-powered structuring makes data more practical for analysis.

- Addressing website restrictions ensures reliable data extraction over time.

- Systems that adapt to growing data requirements remain useful in the long run.

Automated E-commerce Product Scraping for Market Insights

The Client Background: What We’re Working With

The client operates in the retail sector, where real-time access to structured competitor data plays a critical role in pricing strategy and catalog decisions. For businesses aiming to stay competitive, using web scraping for e-commerce market research offers a distinct advantage.

Previously, product data was gathered manually, which led to inefficiencies, outdated insights, and incomplete records. Team members had to revisit product pages repeatedly to record changing prices and availability. This approach was both labour-intensive and error-prone, creating delays in internal processes and limiting visibility into competitor activity.

A high-volume, automated solution was required to scale efforts and extract timely market insights from web scraping, not just once, but on a recurring basis.

Challenges & Objectives

The following were the challenges we identified during the execution of the project:

Challenges

- Navigating deep and paginated category hierarchies

- Dealing with inconsistent templates and missing fields

- Bypassing anti-bot mechanisms without site bans or CAPTCHAs

Objectives

- Develop a reliable ecommerce scraper for over 650,000 records

- Store outputs in a clean CSV format for immediate integration

- Maintain structural integrity even when fields (like price or currency) are missing

- Enable scalable, undetected scraping using rotating proxies and headers

- Prepare data in a format suitable for catalog updates and price monitoring dashboards

Conclusion

Relu Consultancy’s solution demonstrated how a purpose-built ecommerce scraper can support high-volume e-commerce data extraction without triggering anti-scraping systems. With clean, structured outputs and built-in flexibility, the system enables businesses to generate accurate, up-to-date product datasets for intelligence and operational needs.

By adopting e-commerce product scraping for market insights, the client now has the infrastructure for smarter catalog management, competitive price tracking, and ongoing business intelligence. The system is already prepared for recurring runs and can scale to support additional categories or new platforms in the future.

Approach & Implementation

Let’s get into the approach we crafted for the client:

Website Analysis

The team first analyzed the platform’s structure to identify key product and category URLs. Pagination rules were documented, and field-level consistency was assessed. Field mapping allowed the developers to account for variations in the placement of prices, descriptions, and availability statuses. This groundwork ensured accurate e-commerce product scraping for market insights.
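A field map like the one described above can be expressed as a lookup from each canonical field to the labels it appears under on different page templates. The sketch below assumes the scraper has already collected raw label/value pairs from a page; the label names in `FIELD_MAP` are hypothetical examples, not the client platform's actual markup.

```python
from typing import Dict, List, Optional

# Hypothetical field map: each canonical field lists the labels it appeared
# under across different page templates, in order of preference.
FIELD_MAP: Dict[str, List[str]] = {
    "name": ["product_title", "title", "name"],
    "price": ["sale_price", "price", "list_price"],
    "currency": ["currency", "price_currency"],
    "availability": ["stock_status", "availability", "in_stock"],
    "description": ["description", "short_description", "details"],
}


def map_fields(raw: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Resolve template-specific labels to canonical field names.

    A field is None when none of its candidate labels holds a non-blank
    value, so missing data stays explicit instead of being guessed.
    """
    record: Dict[str, Optional[str]] = {}
    for field, candidates in FIELD_MAP.items():
        value = None
        for key in candidates:
            text = (raw.get(key) or "").strip()
            if text:
                value = text
                break
        record[field] = value
    return record
```

Keeping the mapping as data rather than code means a newly discovered template only requires adding a label to the list.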

Scraping Development

The scraping tool was built in Python using BeautifulSoup for parsing HTML. It extracted:

- Product names

- Prices and currencies

- Availability

- Product descriptions

Each page was processed with logic that handled deep pagination and multiple templates. Custom handling was added for fields that were sometimes missing or placed inconsistently across product listings. This allowed the system to deliver structured e-commerce data extraction output without redundancy or confusion during downstream analysis.
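The pagination handling can be sketched as a generator that keeps following "next page" links until none remain. This is a simplified sketch: `fetch_page` is a hypothetical callable standing in for the real HTTP request plus BeautifulSoup parsing, and the visited-set guard against looping pagination links is an added assumption.

```python
from typing import Callable, Iterator, List, Optional, Set, Tuple

# A page fetcher returns the product items on a page and the URL of the next
# page (None on the last page). In practice it would wrap an HTTP request and
# HTML parsing; here it is a hypothetical stand-in.
PageFetcher = Callable[[str], Tuple[List[dict], Optional[str]]]


def crawl_category(start_url: str, fetch_page: PageFetcher, max_pages: int = 10_000) -> Iterator[dict]:
    """Yield every product across a paginated category listing."""
    visited: Set[str] = set()
    url: Optional[str] = start_url
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.add(url)  # stop if a pagination link loops back to a seen page
        items, url = fetch_page(url)
        yield from items
```

For deep category hierarchies, the same loop can be run once per subcategory URL discovered during the website analysis step.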

Anti-Scraping Strategy

To achieve real-time e-commerce data scraping without interruption, the system used:

- Rotating user agents, headers, and IPs via proxy services

- Randomized delays between requests to simulate human browsing patterns

- Graceful error handling and retry logic to account for intermittent blocks or broken links

These steps helped avoid blocks while maximizing throughput. Additionally, session tracking was omitted to prevent the scraper from being flagged as a bot due to repetitive access patterns.
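The request-level tactics listed above can be combined in a single fetch wrapper. This is a minimal sketch, not the project's code: the user-agent strings, delay ranges, and the `TransientError` convention are hypothetical assumptions, and proxy rotation is left out. The HTTP call itself is injected as `fetch` so the retry logic can be exercised without a network.

```python
import itertools
import random
import time
from typing import Callable, Dict, Optional

# Hypothetical user-agent pool; the project also rotated IPs via proxy
# services, which is not shown here.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_5)",
    "Mozilla/5.0 (X11; Linux x86_64)",
]
_AGENT_CYCLE = itertools.cycle(USER_AGENTS)


class TransientError(Exception):
    """Raised by a fetcher for blocks, timeouts, or server errors worth retrying."""


def polite_fetch(
    url: str,
    fetch: Callable[[str, Dict[str, str]], str],
    max_retries: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> Optional[str]:
    """Fetch a page with rotating headers, randomized pauses, and retries.

    Returns None once retries are exhausted, so one bad URL does not stop the run.
    """
    rng = rng or random.Random()
    for attempt in range(max_retries + 1):
        headers = {"User-Agent": next(_AGENT_CYCLE), "Accept-Language": "en-US,en;q=0.9"}
        sleep(base_delay * rng.uniform(0.5, 1.5))  # jittered pause before each request
        try:
            return fetch(url, headers)
        except TransientError:
            if attempt < max_retries:
                sleep(base_delay * 2 ** attempt)  # exponential backoff before retrying
    return None
```

In production, `fetch` might wrap `requests.get(url, headers=headers, proxies=..., timeout=...)` and raise `TransientError` on a 403, 429, or 5xx status. Returning None instead of raising lets the caller log the URL for a later pass, which matches the graceful error handling described above.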

Execution & Delivery

The system successfully scraped 642,000+ unique product listings. Any records missing essential data, like price or currency, were clearly marked for transparency. The CSV output included flags for null or inconsistent fields, enabling downstream teams to filter or correct records as needed.
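The flagging approach can be sketched with the standard `csv` module: every record is kept, and extra columns record what is missing or inconsistent. The choice of price and currency as essential fields follows the text above; the column names and the "plain number" rule for prices are hypothetical assumptions.

```python
import csv
import io
import re
from typing import Dict, List, Optional

FIELDS = ["name", "price", "currency", "availability", "description"]
ESSENTIAL = ["price", "currency"]  # fields whose absence gets flagged
PRICE_RE = re.compile(r"\d+(?:\.\d+)?")  # hypothetical rule for a clean price


def to_flagged_csv(records: List[Dict[str, Optional[str]]]) -> str:
    """Serialize records to CSV, adding flag columns instead of dropping rows."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=FIELDS + ["missing_fields", "inconsistent_fields"], lineterminator="\n"
    )
    writer.writeheader()
    for record in records:
        row = {field: (record.get(field) or "").strip() for field in FIELDS}
        missing = [field for field in ESSENTIAL if not row[field]]
        # Flag a price that is present but not a plain number (e.g. "Call for price").
        price = row["price"].replace(",", "")
        inconsistent = ["price"] if price and not PRICE_RE.fullmatch(price) else []
        row["missing_fields"] = ";".join(missing)
        row["inconsistent_fields"] = ";".join(inconsistent)
        writer.writerow(row)
    return buffer.getvalue()
```

Downstream teams can then filter on the flag columns in a spreadsheet or dashboard rather than discovering gaps after import.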

The final data was structured and shared via a Google Drive link for immediate access and review. The format supported direct import into analytics dashboards and catalog systems.

Benefits

Implementation of the e-commerce product scraping system gave the client many clear advantages:

Time Savings: It eliminated the need for manual data collection, leaving the team free to focus on analysis instead of repetitive tasks.

Real-time Insights: The system enabled faster price comparisons and inventory checks against rivals.

Higher Accuracy: The system ensured higher accuracy by reducing the errors that happened due to manual entry and inconsistent data gathering.

Scalability: The system can handle hundreds of thousands of product records while requiring minimal manual intervention.

Structured Output: It delivers clean CSV files that are ready for dashboards, analytics, and catalog systems.

Operational Efficiency: Besides freeing up internal resources, it improved the speed of decision-making.

Automated Catalog Management: Products could be listed, updated, or removed automatically without requiring any human input.

Valuable Market Insights

Beyond extracting data, the system created a foundation for actionable insights. The market intelligence gained included the following:

- Price Benchmarking: Comparing the client's own product prices with competitors' prices across categories.

- Inventory Tracking: Monitoring products that competitors frequently ran out of, revealing demand surges.

- Trend Identification: Spotting new product launches early and tracking how fast they gain traction.

- Promotional Analysis: Detecting discount cycles, seasonal offers, and bundles used by competitors.

- Category Gaps: Identifying product categories that had high demand but limited competitor presence.

- Consumer Demand Signals: Frequent stockouts and price increases gave indirect signals about fast-moving items.
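Two of these signals reduce to simple arithmetic over the scraped data. The sketch below shows one possible definition of each, assuming prices are already parsed to numbers and availability is recorded as text on every scrape run; the metric definitions and the "out of stock" label are illustrative assumptions rather than the client's actual formulas.

```python
from statistics import median
from typing import List


def price_gap_pct(own_price: float, competitor_prices: List[float]) -> float:
    """How far own price sits above (+) or below (-) the competitor median, in percent."""
    benchmark = median(competitor_prices)
    return round((own_price - benchmark) / benchmark * 100, 2)


def stockout_rate(availability_history: List[str]) -> float:
    """Share of scrape runs in which a listing was reported out of stock."""
    if not availability_history:
        return 0.0
    out = sum(1 for status in availability_history if status.strip().lower() == "out of stock")
    return out / len(availability_history)
```

Using the median rather than the mean keeps one unusually cheap or expensive competitor from skewing the benchmark.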

Besides helping the client adjust pricing strategies, these insights empowered them to improve catalog decisions and identify untapped market opportunities.

Use Cases for Various Industries

While this project was created for the retail sector, the principles of automated large-scale data extraction can be applied to other industries as well. By adapting the scraping logic, data fields, and anti-blocking strategies, the solution can address diverse business needs:

Ecommerce & Retail: Scraping competitor product catalogs, tracking daily or seasonal price changes, and identifying new entrants. This allows retailers to adjust prices quickly, manage inventory more efficiently, and launch targeted campaigns.

Travel & Hospitality: Gathering hotel rates, flight prices, package details, and seasonal availability from multiple booking websites. It allows agencies to offer competitive pricing, optimize packages, and respond to changing demand patterns in real time.

Real Estate: Extracting property listings, rental prices, neighborhood trends, and project availability from real estate websites. This can be used by agencies and investors to track market fluctuations, analyse demand, and generate comparative reports for clients.

Automotive: Monitoring car dealership websites for new and used vehicle listings, spare parts availability, and latest offers. Besides competitive analysis, it supports pricing intelligence and stock management for dealers and resellers.

Consumer Electronics: Tracking online availability, price drops, and reviews for gadgets and appliances. It can be used by brands to identify new launches by competitors, adjust promotional campaigns, or ensure price parity across marketplaces.

Market Research & Analytics Firms: Aggregating data from multiple sectors for creating market reports, forecasts, and performance benchmarks. Automated scraping ensures that insights are based on the most recent and accurate information.

Healthcare & Medical Supplies: Monitoring online suppliers for essential medical equipment, pharmaceuticals, and consumables. It can be used by hospitals and procurement teams to secure better deals and maintain uninterrupted supply.

Key Takeaways

At the end of the project, the following were the main lessons relevant for businesses and decision makers:

- Time and Effort Saved: Automating product data collection eliminates the need for manual research and lets the team focus on higher-value tasks.

- Better Market Awareness: Businesses can stay ahead with regular access to competitor prices, stock levels, and promotions.

- Accurate Insights: Clean, accurate data reduces errors and supports confident decision-making.

- Scalability Matters: A system that can handle hundreds of thousands of products lets data collection grow without a matching increase in manual effort.

- Actionable Outcomes: Beyond information collection, the solution provided practical insights for pricing, catalog updates, and market opportunities.

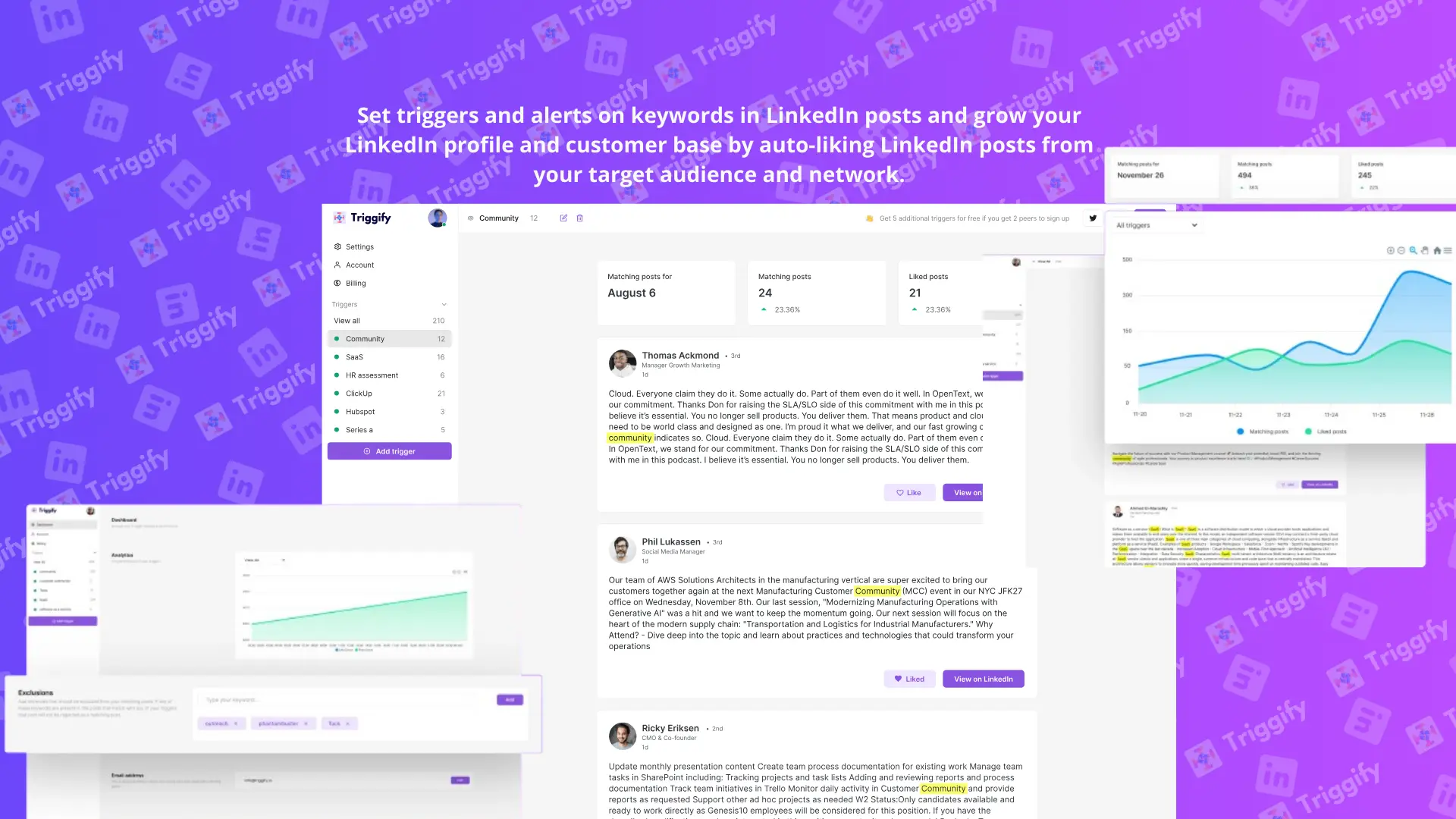

HP Social Tracker

Client Background

HP is a well-recognized technology company that makes and sells computers and printers. Its business is divided into two main segments: Personal Systems and Printing. Through its Personal Systems segment, the company sells desktops, laptops, and workstations, catering to both individual consumers and businesses.

In 2015, the original Hewlett-Packard Company split into two entities: HP Inc. and Hewlett Packard Enterprise (HPE). HP Inc. retained the computer and printer business. The company serves millions of customers globally and has established a solid distribution network. It offers its products through brand stores, online, and directly to businesses.

As a global technology brand, HP continually looks for ways to extend its brand reach through authentic initiatives. Employees and channel partners are often the most credible advocates of a brand, and HP aimed to leverage this network to amplify its message organically.

However, tracking social media participation across multiple platforms manually was proving to be unsustainable and inefficient. HP needed a scalable employee advocacy platform that could automate data collection, standardize scoring, and visualize performance in real time to maintain momentum throughout the campaign period.

Challenges & Objectives

Before diving into the solutions, let’s understand the key challenges we faced and the main objectives we aimed to achieve through this project:

Challenges

Multi-Platform Complexity: The project required capturing posts from three different social media platforms, each with its own data structures, APIs, and privacy restrictions. This made consistent data collection and validation technically challenging.

Content Quality Control: Filtering out irrelevant posts, duplicates, and unrelated hashtags was challenging at scale, and participants experimenting with different content formats made the task even more complex.

Motivation and Fairness: Maintaining trust in the scoring system was critical. Any perception of bias, or any scoring error, could reduce participation and overall engagement.

Scalability Issues: Besides being time-consuming, manual monitoring was not sustainable for the campaign’s global reach. An automated system was required to handle large volumes of content daily without errors.

Engagement Fatigue: Another major concern was to sustain participant interest over the entire campaign duration. Employees and partners could lose motivation without visible recognition and timely updates.

Data Transparency for Stakeholders: The marketing team needed accurate, real-time insights into campaign performance. Manual spreadsheets could cause delays and lacked the visualisations needed for quick decision-making.

Objectives

Automate Campaign Monitoring: The project required the creation of a system that could seamlessly capture, validate, and track hashtag-based posts across the three social media platforms: Facebook, Instagram, and LinkedIn.

Standardise Scoring: A transparent, rules-based scoring matrix was needed to ensure fairness and encourage quality over quantity.

Promote Healthy Competition: Build a leaderboard, updated in real time, that motivates employees and partners through gamification and recognition.

Deliver Actionable Insights: Another important objective was to provide the HP marketing team with a comprehensive dashboard that offered data visualization, performance tracking, and campaign reporting.

Ensure Scalability and Reusability: The project also required designing a framework that could be adapted for future campaigns or other regions, or extended to various internal initiatives.

Strengthen Advocacy Culture: Another critical objective was to encourage employees and channel partners to become brand advocates. This would amplify HP’s reach through their trusted networks.

Improve Efficiency: We needed to minimize manual effort and reduce dependency on human intervention such that the focus remained on strategy and engagement.

Conclusion

Relu Consultancy’s end-to-end solution enabled HP to run a high-impact social media engagement campaign that combined automation, transparency, and user motivation. The system successfully tracked and rewarded internal and partner contributions across multiple platforms, transforming what would have been a manual and error-prone task into a streamlined, data-driven campaign.

By promoting brand content through trusted networks and recognizing active contributors in real time, HP was able to increase its organic visibility and foster stronger community involvement. The campaign set a benchmark for social media gamification in future employee and partner-driven marketing initiatives.

Approach & Implementation

Here is the step-by-step strategy and actions taken to successfully plan, execute, and complete the project:

Hashtag & Platform Criteria

The project began by working closely with HP to define the list of approved campaign hashtags. These would serve as the primary filters for identifying eligible content. The system was designed to monitor posts on Facebook, Instagram, and LinkedIn, using keyword-matching logic to verify inclusion of valid hashtags.
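The keyword-matching step can be sketched in a few lines, assuming each post arrives as plain text. The tags in `APPROVED_HASHTAGS` are placeholders, not HP's actual campaign hashtags. Matching here is exact and case-insensitive, and results are collected in a set, so a post that repeats a tag counts it only once.

```python
import re
from typing import Set

# Placeholder tags; the real list was agreed with HP before launch.
APPROVED_HASHTAGS = {"#examplecampaign", "#examplebrand"}

HASHTAG_RE = re.compile(r"#\w+")


def find_valid_hashtags(post_text: str, approved: Set[str] = APPROVED_HASHTAGS) -> Set[str]:
    """Return the approved hashtags present in a post (case-insensitive, each once)."""
    found = {tag.lower() for tag in HASHTAG_RE.findall(post_text)}
    return found & {tag.lower() for tag in approved}


def is_eligible(post_text: str, approved: Set[str] = APPROVED_HASHTAGS) -> bool:
    """A post is eligible if it carries at least one approved hashtag."""
    return bool(find_valid_hashtags(post_text, approved))
```

Exact matching means a variant such as "#ExampleCampaign2024" is not accepted; whether near-matches should count is a policy choice the approved list would need to settle.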

Automated Scraper System

Relu Consultancy developed a platform-specific scraping system that collected social media posts once every 24 hours. The scraper was customized to handle each platform’s formatting and privacy constraints. It extracted relevant data, including:

- Date and time of the post

- Post content

- Associated hashtags

- Poster identity (when available)

The automation eliminated the need for manual tracking and reduced the chance of human error, providing the data feed for a social media automation dashboard suited to an enterprise brand.
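Because the scraper ran every 24 hours, the same post could be collected more than once. One hedged way to model the extracted fields and drop repeats is to fingerprint each post by platform, author, and normalized content. The `Post` type and the fingerprint rule are hypothetical illustrations, not the project's actual schema.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Set, Tuple


@dataclass(frozen=True)
class Post:
    platform: str                 # "facebook", "instagram" or "linkedin"
    posted_at: datetime
    content: str
    hashtags: Tuple[str, ...]
    author: Optional[str]         # None when the platform does not expose it


def fingerprint(post: Post) -> str:
    """Hash of platform, author, and whitespace/case-normalized content."""
    normalized = " ".join(post.content.lower().split())
    key = f"{post.platform}|{post.author or ''}|{normalized}"
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def deduplicate(posts: List[Post]) -> List[Post]:
    """Keep the earliest copy of each post when the same content is re-scraped."""
    seen: Set[str] = set()
    unique: List[Post] = []
    for post in sorted(posts, key=lambda p: p.posted_at):
        fp = fingerprint(post)
        if fp not in seen:
            seen.add(fp)
            unique.append(post)
    return unique
```

Including the platform in the fingerprint means the same message shared on two platforms is kept twice, which fits a scoring scheme that rewards posts per platform.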

Scoring Logic & Matrix

A flexible point system was introduced to quantify participant contributions. For instance, a valid post on Instagram might earn a set number of points, and an additional valid post on LinkedIn would earn points in the same way.

Points were calculated from the number of valid posts per participant and updated automatically in the system, forming part of an employee advocacy tracking system with real-time scoring.

Only posts that met predefined criteria were awarded points, ensuring quality control and preventing exploitation through repetitive or unrelated content.
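The scoring rule described above reduces to a weighted count of validated posts. In this sketch the point values in `POINTS_PER_PLATFORM` are hypothetical placeholders (the actual matrix was set with HP), and posts on platforms outside the campaign earn nothing.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical point values per validated post; the real matrix was agreed with HP.
POINTS_PER_PLATFORM = {"facebook": 10, "instagram": 10, "linkedin": 10}


def score_participants(valid_posts: List[Tuple[str, str]]) -> Dict[str, int]:
    """Sum points per participant from (participant, platform) pairs of validated posts."""
    totals: Dict[str, int] = defaultdict(int)
    for participant, platform in valid_posts:
        totals[participant] += POINTS_PER_PLATFORM.get(platform.lower(), 0)
    return dict(totals)
```

Because only posts that already passed hashtag and duplicate checks are passed in, the scoring function itself stays simple and easy to audit, which supports the fairness goal.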

Dashboard & Leaderboard

Relu Consultancy delivered two key visual components:

- Admin Dashboard for HP: This interface allowed HP’s marketing team to review participation statistics, post volumes, and platform-specific breakdowns.

- Leaderboard for Participants: A public-facing interface ranked contributors by total score, helping build excitement and encouraging healthy competition. The leaderboard was refreshed daily, reflecting the latest validated activity.
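Ranking the leaderboard needs one decision the text leaves open: how to handle ties. The sketch below assumes standard competition ranking (tied scores share a rank and the next rank is skipped, as in 1, 2, 2, 4) with alphabetical order within a tie; both choices are assumptions for illustration.

```python
from typing import Dict, List, Tuple


def build_leaderboard(totals: Dict[str, int]) -> List[Tuple[int, str, int]]:
    """Rank participants by score (highest first); tied scores share a rank."""
    ordered = sorted(totals.items(), key=lambda item: (-item[1], item[0]))
    board: List[Tuple[int, str, int]] = []
    for position, (name, score) in enumerate(ordered, start=1):
        # Reuse the previous rank on a tie, otherwise take the list position.
        rank = board[-1][0] if board and board[-1][2] == score else position
        board.append((rank, name, score))
    return board
```

A deterministic tie-break keeps the public ranking stable between daily refreshes, so participants do not see their position change when their score has not.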

Together, these tools supported HP’s vision for brand engagement automation with as much transparency as possible.

Maintenance & Updates

Daily monitoring and updates were central to the campaign’s success. The system was configured to scrape new data every morning, validate it against scoring rules, and refresh both dashboards. Feedback loops from HP’s team were used to refine filtering logic and address edge cases, such as duplicate hashtags or platform-specific anomalies.

Results & Outcomes

The following results highlight the key achievements and impact of the project:

- Over the course of the campaign, the system tracked thousands of valid posts across the three platforms, all without manual intervention.

- Participation among internal employees and channel partners increased significantly due to real-time recognition and visibility.

- The gamified structure and transparent leaderboard fostered sustained engagement, with several participants competing actively until the campaign’s end.

- HP was able to access a clean, structured dataset for performance reporting and future campaign planning.

Benefits

Here are the main benefits of the HP Social Tracker system:

- Real-time engagement tracking: Daily updates incentivize participation and keep momentum high.

- Scalable framework: The system can be reused for future campaigns or adapted for other internal initiatives.

- Custom dashboards: Tailored interfaces for admins and participants enable transparency and control.

- Automation reduces overhead: Manual effort is minimized, freeing teams to focus on strategy and community building.

- Data-driven insights: Structured reporting enables better evaluation of campaign effectiveness and future planning.

These advantages collectively ensured that HP's campaign was not only successful in the short term, but also established a scalable model for future engagement initiatives.

Use Cases by Industry

The HP Social Tracker can support different industries based on their specific communication and engagement goals:

Technology & IT Services:

- Track employee advocacy and engagement during product launches or internal branding initiatives.

- Encourage partners and resellers to share case studies and client success stories to strengthen market credibility.

Retail:

- Motivate in-store employees and franchise partners to share promotional campaigns, seasonal sales, and store opening updates.

- Showcase customer experiences and product demonstrations through employee-generated content.

Education:

- Boost visibility for campus events, admission campaigns, and alumni stories by engaging students and staff.

- Encourage student ambassadors to share experiences during placement drives or academic competitions.

Healthcare:

- Promote awareness campaigns for health checkups, vaccination drives, or hospital initiatives through employee and doctor advocacy.

- Encourage staff to highlight success stories, CSR activities, or patient education content.

Finance:

- Strengthen internal communications and brand image by gamifying content sharing during key business quarters.

- Encourage employees to share updates about financial literacy programs, investment products, or corporate achievements.

Hospitality & Travel:

- Encourage hotel staff, travel agents, and tour operators to share guest experiences, property highlights, and cultural activities.

- Promote seasonal offers, new packages, and local experiences through employee advocacy.

Manufacturing & Industrial:

- Showcase factory operations, safety initiatives, and CSR activities to build transparency and trust.

- Enable distributors and channel partners to share updates on product launches and training workshops.

Non-Profit & NGOs:

- Mobilize volunteers and staff to share stories from fieldwork and fundraising events.

- Use the leaderboard concept to motivate communities to amplify awareness drives.

Government & Public Sector:

- Encourage employees to share updates related to national campaigns, digital initiatives, or public outreach programs.

- Use advocacy tracking during awareness weeks to measure citizen engagement.

These examples show how the tracker can be adapted for real-world engagement, regardless of sector or campaign focus.

Key Takeaways

Here are the main insights and lessons from the project:

- Gamification Works: Introducing a leaderboard and reward structure was effective in motivating participants and sustaining activity.

- Automation Was Essential: Without an automated scraper and scoring engine, real-time tracking at this scale would have been unmanageable.

- Visibility Increases Engagement: Participants were more likely to contribute consistently when they could track their ranking and receive immediate feedback.

- Scalability Is Now Possible: The system built by Relu Consultancy can be reused and scaled for future campaigns or extended to other regions and user groups.

Zapier Email Parser for Automated Lead Follow-Up Workflows

The Client Background

The client manages property inquiries for real estate listings. Each incoming inquiry had to be responded to in a timely, relevant, and personalized manner. Before this project, lead data was manually extracted and sorted, a process prone to delays, errors, and duplicate follow-ups.

To scale their outreach while maintaining a personal touch, the client needed a way to automate lead follow-up emails using Zapier while minimizing manual intervention.

Challenges and Objectives with the Project

The following are some challenges that we ran into during execution:

Challenges

- The default Zapier email parser struggled to extract data consistently from semi-structured inquiry emails.

- Without a validation layer, follow-up emails risked being sent multiple times to the same lead.

- The process had to maintain personalization while scaling automated responses.

Objectives

- Improve parsing logic to extract leads from emails with Zapier Email Parser

- Validate each new lead against a live database before sending any communication

- Use Zapier lead automation to deliver booking links and tailored messages without delays

Conclusion

Relu Consultancy delivered a reliable, scalable solution for Zapier lead automation that improved how the client responded to property inquiries. The system combined email parsing, lead validation, and tailored communication to offer fast, professional responses without human oversight.

By using email automation tools and enhancing the Zapier email parser, the client was able to reduce turnaround times, prevent errors, and improve lead conversion outcomes. The project stands as a model for how to automate lead follow-up emails using Zapier in a structured, error-free, and scalable manner.

Our Tailored Approach & Implementation

Here’s how we structured our approach:

Email Parsing & Extraction

The first step involved identifying emails with the subject line “New Interest.” Relu Consultancy developed a Python script that extended the Zapier email parser's functionality by applying pattern recognition to extract:

- Lead name

- Phone number

- Email address

- Property or building of interest

This combination of email automation tools and custom scripting offered more control over how the lead details were captured. It also provided a clear example of how to use Zapier Email Parser for lead management in real-world applications.
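A minimal sketch of this kind of pattern-based extraction, assuming the inquiry emails use "Label: value" lines. The label variants in `FIELD_PATTERNS` and the function name are hypothetical, since the actual email layout is not shown in the case study; how the function would be wired into a Zap (for example, a Code by Zapier step reading the trigger's subject and body) is also an assumption and not shown.

```python
import re
from typing import Dict, Optional

# Hypothetical label variants for each field in a "New Interest" email body.
# [ \t] is used instead of \s so a pattern never spills onto the next line.
FIELD_PATTERNS = {
    "name": re.compile(r"^[ \t]*(?:full name|name)[ \t]*[:\-][ \t]*(.+)$", re.I | re.M),
    "phone": re.compile(r"^[ \t]*(?:phone|mobile|tel)[ \t]*[:\-][ \t]*(\+?[\d() .\-]{7,})$", re.I | re.M),
    "email": re.compile(r"^[ \t]*e-?mail[ \t]*[:\-][ \t]*([\w.+-]+@[\w-]+(?:\.[\w-]+)+)", re.I | re.M),
    "property": re.compile(r"^[ \t]*(?:property|building|listing)[ \t]*[:\-][ \t]*(.+)$", re.I | re.M),
}


def parse_inquiry(subject: str, body: str) -> Optional[Dict[str, Optional[str]]]:
    """Extract lead details from a "New Interest" email; None for other emails."""
    if "new interest" not in subject.lower():
        return None
    text = body.replace("\r\n", "\n")  # normalize Windows line endings
    lead: Dict[str, Optional[str]] = {}
    for field, pattern in FIELD_PATTERNS.items():
        match = pattern.search(text)
        lead[field] = match.group(1).strip() if match else None
    return lead
```

Missing fields come back as None rather than raising, so the validation step can decide whether to halt.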

Filtering & Validation

Once lead details were extracted, the system checked whether a valid email address was present. If missing, the process was halted to avoid incomplete follow-ups. When an email was found, it was cross-checked with the client’s lead database.

This ensured that follow-up emails were only sent to new leads, preventing duplicate outreach and protecting the client’s credibility.
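The two checks above (halt on a missing email, skip known leads) can be sketched against a small database. This version uses an in-memory SQLite table purely for illustration; the `leads` schema and the function names are hypothetical, as the client's actual lead database is not described.

```python
import sqlite3
from typing import Dict, Optional


def init_db(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per lead, keyed by normalized email.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS leads (email TEXT PRIMARY KEY, name TEXT, property TEXT)"
    )


def _normalize_email(lead: Dict[str, Optional[str]]) -> str:
    return (lead.get("email") or "").strip().lower()


def should_follow_up(conn: sqlite3.Connection, lead: Dict[str, Optional[str]]) -> bool:
    """False if the email is missing (halt) or already on file (duplicate)."""
    email = _normalize_email(lead)
    if not email:
        return False
    row = conn.execute("SELECT 1 FROM leads WHERE email = ?", (email,)).fetchone()
    return row is None


def record_lead(conn: sqlite3.Connection, lead: Dict[str, Optional[str]]) -> None:
    """Log the lead so later inquiries from the same address are recognized."""
    conn.execute(
        "INSERT OR IGNORE INTO leads (email, name, property) VALUES (?, ?, ?)",
        (_normalize_email(lead), lead.get("name"), lead.get("property")),
    )
    conn.commit()
```

Normalizing case and whitespace before the lookup matters: "Jane@Example.com" and "jane@example.com " are the same lead, and treating them as different would let duplicate follow-ups slip through.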

Automated Email Sending

After validation, the system triggered a personalized follow-up email containing a property tour booking link. The message used the extracted details to address the lead by name and reference the property of interest, keeping the tone relevant and human.

All new lead data was logged into the database to support future validation, analytics, or CRM usage. This process showed how to automate lead follow-up emails using Zapier while preserving a professional tone.
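The personalization step can be sketched with the standard library's `string.Template`. The wording, the sign-off, the fallback values, and the booking URL are hypothetical; the client's actual message and scheduling tool are not described in this case study.

```python
from string import Template
from typing import Dict, Optional, Tuple

# Hypothetical follow-up wording; the client's real template is not shown.
FOLLOW_UP = Template(
    "Hi $first_name,\n\n"
    "Thanks for your interest in $property. "
    "You can book a tour at a time that suits you here: $booking_link\n\n"
    "Best regards,\nThe Leasing Team"
)


def render_follow_up(lead: Dict[str, Optional[str]], booking_link: str) -> Tuple[str, str]:
    """Build (subject, body) for a lead, with neutral fallbacks for missing fields."""
    name_parts = (lead.get("name") or "").split()
    first_name = name_parts[0] if name_parts else "there"
    property_name = lead.get("property") or "our listings"
    subject = f"Book a tour of {property_name}"
    body = FOLLOW_UP.substitute(
        first_name=first_name, property=property_name, booking_link=booking_link
    )
    return subject, body
```

`Template.substitute` raises `KeyError` if a placeholder is left unfilled, which surfaces template mistakes early instead of sending an email with a literal "$property" in it.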

Results & Outcomes

Upon implementation, we observed the following key results:

- Lead data was accurately extracted using enhanced logic layered over the Zapier email parser.

- Real-time follow-up emails were delivered without delay or duplication.

- The manual effort was drastically reduced, freeing up internal resources.

- The client improved their response time and credibility with prospective buyers.

- The project demonstrated the effective use of Zapier lead automation to manage high volumes of inquiries.

Benefits

Implementation of this automated lead follow-up system led to several measurable and strategic benefits:

Faster Lead Response: By automating parsing and follow-up, the client could respond to inquiries instantly, which significantly improved the chances of engaging qualified leads.

Reduced Manual Effort: The team did not need to spend hours extracting and validating lead data. It freed them up for higher value tasks, such as client relationship building.

Improved Accuracy: Errors while extracting contact details were minimised with enhanced parsing logic. It ensured messages reached the right people with the right context.

Elimination of Duplicate Outreach: The validation layer made sure that each lead received only one relevant follow-up. It helped to protect brand reputation and avoid customer frustration.

Personalized at Scale: The system maintained a human tone in all communications, helping build trust and rapport without manual intervention.

Scalability: The automated process can handle growing inquiry volumes without affecting speed or quality, which supports the client’s long-term growth.

Use Cases Across Industries

This project was designed for a real estate business. However, the same approach can be adapted to multiple sectors:

E-Commerce: Automatically extract customer inquiries from order-related emails to send personalized product recommendations or status updates.

Travel and Hospitality: Parse booking inquiries from email to send instant confirmations, itineraries, or upsell offers for tours and activities.

Education: Process student admission or course inquiries from email to send tailored program details with application links.

Healthcare: Extract patient queries from email and send appointment booking links or relevant resources.

Professional Services: Capture potential client inquiries to automate responses with service details, booking links, or case studies.

Event Management: Parse event registration emails to send immediate confirmations, schedules, and ticket details.

Key Takeaways

The following were our core learnings and takeaways from the undertaking:

- Using email automation tools alongside custom Python scripting extends the value of the Zapier email parser.

- Real-time validation protects against redundant outreach and maintains a consistent lead experience.

- This project is a clear example of how to use Zapier Email Parser for lead management in real estate or similar industries.

- By combining automation with personalization, it’s possible to extract leads from emails with Zapier Email Parser and act on them intelligently.

Automated E-commerce Product Scraping for Market Insights

Project Overview

This project focused on building an e-commerce scraper capable of extracting over 650,000 product records from a large online retail platform. The solution was designed to support catalog management, pricing intelligence, and market research.

By automating data collection, the client moved from manual effort to a scalable system tailored for e-commerce data extraction.

The Client Background: What We’re Working With

The client operates in the retail sector, where real-time access to structured competitor data plays a critical role in pricing strategy and catalog decisions. For businesses aiming to stay competitive, using web scraping for e-commerce market research offers a distinct advantage.

Previously, product data was gathered manually, which led to inefficiencies, outdated insights, and incomplete records. Team members had to revisit product pages repeatedly to record changing prices and availability. This approach was both labour-intensive and error-prone, creating delays in internal processes and limiting visibility into competitor activity.

A high-volume, automated solution was required to scale these efforts and extract timely market insights on a recurring basis, not just once.

Challenges & Objectives

The following were the challenges we identified during the execution of the project:

Challenges

- Navigating deep and paginated category hierarchies

- Dealing with inconsistent templates and missing fields

- Working around anti-bot mechanisms without triggering site bans or CAPTCHAs

Objectives

- Develop a reliable ecommerce scraper for over 650,000 records

- Store outputs in a clean CSV format for immediate integration

- Maintain structural integrity even when fields (like price or currency) are missing

- Enable scalable, undetected scraping using rotating proxies and headers

- Prepare data in a format suitable for catalog updates and price monitoring dashboards

Conclusion

Relu Consultancy’s solution demonstrated how a purpose-built ecommerce scraper can support high-volume e-commerce data extraction without triggering anti-scraping systems. With clean, structured outputs and built-in flexibility, the system enables businesses to generate accurate, up-to-date product datasets for intelligence and operational needs.

By adopting e-commerce product scraping for market insights, the client now has the infrastructure for smarter catalog management, competitive price tracking, and ongoing business intelligence. The system is already prepared for recurring runs and can scale to support additional categories or new platforms in the future.

Approach & Implementation

Let’s get into the approach we crafted for the client:

Website Analysis

The team first analyzed the platform’s structure to identify key product and category URLs. Pagination rules were documented, and field-level consistency was assessed. Field mapping allowed the developers to account for variations in the placement of prices, descriptions, and availability statuses. This groundwork ensured accurate e-commerce product scraping for market insights.

Scraping Development

The scraping tool was built in Python using BeautifulSoup for parsing HTML. It extracted:

- Product names

- Prices and currencies

- Availability

- Product descriptions

Each page was processed with logic that handled deep pagination and multiple templates. Custom handling was added for fields that were sometimes missing or placed inconsistently across product listings. This allowed the system to deliver structured e-commerce data extraction output without redundancy or confusion during downstream analysis.
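The per-field extraction with missing-field handling could look roughly like the sketch below. To stay dependency-free it uses Python's built-in `html.parser` instead of BeautifulSoup, and the class names in `FIELD_CLASSES` (`product-name`, `price`, and so on) are hypothetical placeholders for whatever the field mapping found on the real site. The price parser also assumes a `.` decimal separator.

```python
import re
from html.parser import HTMLParser

# Hypothetical class names from the field-mapping step; every real site differs.
FIELD_CLASSES = {
    "product-name": "name",
    "price": "price",
    "currency": "currency",
    "availability": "availability",
    "description": "description",
}
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ProductParser(HTMLParser):
    """Collects the text of elements whose class attribute maps to a product field."""

    def __init__(self):
        super().__init__()
        self.fields = {}
        self._stack = []  # (tag, field or None) for each open element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return  # void elements never get a closing tag
        classes = (dict(attrs).get("class") or "").split()
        field = next((FIELD_CLASSES[c] for c in classes if c in FIELD_CLASSES), None)
        self._stack.append((tag, field))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag so unclosed children do not break nesting.
        for i in range(len(self._stack) - 1, -1, -1):
            if self._stack[i][0] == tag:
                del self._stack[i:]
                break

    def handle_data(self, data):
        # Attribute text to the innermost open element that maps to a field.
        field = next((f for _, f in reversed(self._stack) if f), None)
        text = data.strip()
        if field and text:
            self.fields[field] = (self.fields.get(field, "") + " " + text).strip()


def to_number(text):
    """Turn a price string such as '1,299.00' into a float (assumes '.' decimals)."""
    if text is None:
        return None
    try:
        return float(re.sub(r"[^\d.]", "", text))
    except ValueError:
        return None


def parse_product(html):
    """Parse one product page (or listing card) into a fixed-shape record."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    # Every mapped field is always present; fields not found on the page become None.
    record = {field: parser.fields.get(field) for field in FIELD_CLASSES.values()}
    record["price"] = to_number(record["price"])
    record["missing_fields"] = [f for f, v in record.items() if v is None]
    return record
```

Keeping every field in every record, with `None` and a `missing_fields` list instead of dropping the key, is what lets later steps flag incomplete rows rather than silently misalign columns. With BeautifulSoup the same idea reduces to one `soup.select_one(...)` lookup per field.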

Anti-Scraping Strategy

To achieve real-time e-commerce data scraping without interruption, the system used:

- Rotating user agents, headers, and IPs via proxy services

- Randomized delays between requests to simulate human browsing patterns

- Graceful error handling and retry logic to account for intermittent blocks or broken links

These steps helped avoid blocks while maximizing throughput. Additionally, session tracking was omitted to prevent the scraper from being flagged as a bot due to repetitive access patterns.
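A minimal standard-library sketch of the rotation, randomized delay, and retry ideas follows. The user-agent strings and proxy URLs are placeholders, `polite_get` and its parameters are hypothetical names, and growing the pause exponentially after each failure is one reasonable choice rather than the project's documented setting. Each request builds a fresh opener with no cookie handler, so no session state carries over between requests.

```python
import itertools
import random
import time
import urllib.error
import urllib.request

# Placeholder pools; in practice these come from a proxy provider and a maintained UA list.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 14_4) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4 Safari/605.1.15",
]
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]


def default_fetch(url, user_agent, proxy, timeout=20):
    """Fetch one URL through `proxy`; a fresh opener means no cookies or session persist."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    )
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with opener.open(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def polite_get(url, fetch=default_fetch, max_retries=3, min_delay=1.0,
               max_delay=4.0, sleep=time.sleep, rng=random):
    """Fetch a page with a random pause, rotating identity, and retries.

    Returns the page text, or None for a 404 (a broken link is recorded, not retried).
    """
    proxy_cycle = itertools.cycle(PROXIES)
    last_error = None
    for attempt in range(max_retries + 1):
        # Random pause before every request, doubling its range after each failure.
        sleep(rng.uniform(min_delay, max_delay) * (2 ** attempt))
        user_agent = rng.choice(USER_AGENTS)
        proxy = next(proxy_cycle)
        try:
            return fetch(url, user_agent, proxy)
        except urllib.error.HTTPError as exc:  # must precede URLError (its parent class)
            if exc.code == 404:
                return None
            last_error = exc  # e.g. 403/429: treat as an intermittent block and rotate
        except (urllib.error.URLError, TimeoutError, ConnectionError) as exc:
            last_error = exc
    raise RuntimeError(f"giving up on {url} after {max_retries + 1} attempts") from last_error
```

Injecting `fetch` and `sleep` keeps the retry logic testable without network access or real waiting.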

Execution & Delivery

The system successfully scraped 642,000+ unique product listings. Any records missing essential data, like price or currency, were clearly marked for transparency. The CSV output included flags for null or inconsistent fields, enabling downstream teams to filter or correct records as needed.

The final data was structured and shared via a Google Drive link for immediate access and review. The format supported direct import into analytics dashboards and catalog systems.
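The flagged CSV output might be produced along these lines. The column names, the `product_id` deduplication key, and the flag labels (`missing_price`, `negative_price`, and so on) are assumptions for illustration; the case study states only that records were unique and that missing or inconsistent fields were flagged.

```python
import csv

COLUMNS = ["product_id", "name", "price", "currency", "availability", "description"]
REQUIRED = ("price", "currency")  # the fields the case study singles out as essential


def write_products_csv(records, out):
    """Write deduplicated records to `out`, adding a `flags` column; return rows written.

    Assumes each record carries a stable (hypothetical) `product_id`.
    """
    writer = csv.DictWriter(out, fieldnames=COLUMNS + ["flags"])
    writer.writeheader()
    seen = set()
    written = 0
    for rec in records:
        key = rec.get("product_id")
        if key in seen:
            continue  # keep only the first copy of each listing
        seen.add(key)
        flags = [f"missing_{f}" for f in REQUIRED if rec.get(f) in (None, "")]
        price = rec.get("price")
        if isinstance(price, (int, float)) and price < 0:
            flags.append("negative_price")
        row = {col: rec.get(col, "") for col in COLUMNS}  # None is written as an empty cell
        row["flags"] = ";".join(flags)
        writer.writerow(row)
        written += 1
    return written
```

Flagging instead of dropping incomplete rows keeps the record count honest and lets downstream teams decide whether to filter or repair.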

Benefits

Implementation of the e-commerce product scraping system gave the client many clear advantages:

Time Savings: It eliminated manual data collection, leaving the team free to focus on analysis instead of repetitive tasks.

Real-time Insights: The system enabled faster price comparisons and inventory checks against rivals.

Higher Accuracy: The system reduced errors caused by manual entry and inconsistent data gathering.

Scalability: The system can handle hundreds of thousands of product records with minimal manual intervention.

Structured Output: It delivers clean CSV files that are ready for dashboards, analytics, and catalog systems.

Operational Efficiency: Besides freeing up internal resources, it sped up decision-making.

Automated Catalog Management: Products could be listed, updated, or removed automatically without requiring any human input.

Valuable Market Insights

The system did more than extract data: it laid a foundation for actionable insights. The valuable market intelligence gained included the following:

- Price Benchmarking: Comparing the client’s own product prices with competitors’ prices across various categories.

- Inventory Tracking: Monitoring products that competitors frequently ran out of, revealing demand surges.

- Trend Identification: Spotting new product launches early and tracking how fast they gain traction.

- Promotional Analysis: Detecting discount cycles, seasonal offers, and bundles used by competitors.

- Category Gaps: Identifying product categories with high demand but limited competitor presence.

- Consumer Demand Signals: Frequent stockouts and price increases gave indirect signals about fast-moving items.

Besides helping the client adjust pricing strategies, these insights empowered them to improve catalog decisions and identify untapped market opportunities.
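Two of these signals, price benchmarking and stockout tracking, reduce to simple arithmetic over repeated scrape runs. The sketch below assumes products have already been matched across sites to a shared key, a hard step the case study does not describe, and the function names and the "out of stock" text match are illustrative only.

```python
from collections import defaultdict
from statistics import median


def price_gaps(own_prices, competitor_offers):
    """Percent gap between our price and the median competitor price, per matched SKU."""
    gaps = {}
    for sku, own in own_prices.items():
        rivals = [p for p in competitor_offers.get(sku, []) if p is not None]
        if rivals:  # skip SKUs with no usable competitor price
            ref = median(rivals)
            gaps[sku] = round(100 * (own - ref) / ref, 1)
    return gaps


def stockout_rates(snapshots):
    """Share of scrape runs in which each competitor product showed as out of stock.

    `snapshots` is one dict per run mapping product_id -> availability text.
    """
    seen = defaultdict(int)
    out = defaultdict(int)
    for snapshot in snapshots:
        for product_id, availability in snapshot.items():
            seen[product_id] += 1
            if availability and "out of stock" in availability.lower():
                out[product_id] += 1
    return {pid: out[pid] / seen[pid] for pid in seen}
```

A positive gap means the client is priced above the competitor median; a high stockout rate on a rival item is the kind of indirect demand signal described above.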

Use Cases for Various Industries

While this project was created for the retail sector, the principles of automated large-scale data extraction apply to other industries as well. By adapting the scraping logic, data fields, and anti-blocking strategies, this solution can address diverse business needs:

Ecommerce & Retail: Scraping competitor product catalogs, tracking daily or seasonal price changes, and identifying new entrants. Besides allowing retailers to adjust prices quickly, it lets them manage inventory more efficiently and launch targeted campaigns.

Travel & Hospitality: Gathering hotel rates, flight prices, package details, and seasonal availability from multiple booking websites. It allows agencies to offer competitive pricing, optimize packages, and respond to changing demand patterns in real time.

Real Estate: Extracting property listings, rental prices, neighborhood trends, and project availability from real estate websites. This can be used by agencies and investors to track market fluctuations, analyse demand, and generate comparative reports for clients.

Automotive: Monitoring car dealership websites for new and used vehicle listings, spare parts availability, and latest offers. Besides competitive analysis, it supports pricing intelligence and stock management for dealers and resellers.

Consumer Electronics: Tracking online availability, price drops, and reviews for gadgets and appliances. It can be used by brands to identify new launches by competitors, adjust promotional campaigns, or ensure price parity across marketplaces.

Market Research & Analytics Firms: Aggregating data from multiple sectors for creating market reports, forecasts, and performance benchmarks. Automated scraping ensures that insights are based on the most recent and accurate information.

Healthcare & Medical Supplies: Monitoring online suppliers for essential medical equipment, pharmaceuticals, and consumables. It can be used by hospitals and procurement teams to secure better deals and maintain uninterrupted supply.

Key Takeaways

At the end of the project, the following were the main lessons relevant for businesses and decision makers:

- Time and Effort Saved: Automating product data collection eliminates the need for manual research and lets the team focus on higher-value tasks.

- Better Market Awareness: Businesses can stay ahead with regular access to competitor prices, stock levels, and promotions.

- Accurate Insights: Clean, accurate data reduces errors and supports confident decision-making.

- Scalability Matters: A system that can handle hundreds of thousands of products supports growth without a matching increase in effort.

- Actionable Outcomes: Beyond information collection, the solution provided practical insights for pricing, catalog updates, and market opportunities.

Fake Review Detection & Pattern Analysis for Amazon Listings

Project Overview

Has an online review ever left you confused? On Amazon, we found that many such reviews were fake. These reviews mislead buyers, damage seller reputations, and distort product rankings. They pose a serious threat to sellers who rely on authentic customer feedback.

Our client, a mid-sized Amazon seller with listings across multiple categories, noticed a sudden drop in product credibility. Their listings were receiving suspicious reviews that didn’t match actual customer experiences. Some reviews were irrelevant, while others promoted unrelated products. The client wanted to understand the problem, stop the damage, and prevent future attacks.

We took on the challenge with a clear goal: identify fake reviews, analyse manipulation patterns, and build a scalable system for reporting and prevention.

The Challenges

The client faced several interconnected problems:

- Suspicious Review Volume: A sharp increase in 1-star reviews with vague or irrelevant content. Many reviews appeared automated or copied from other listings.

- Manual Detection Limitations: The client’s internal team could not keep pace. Identifying coordinated attacks manually was slow and often inaccurate.

- Lack of Structured Evidence: Even when fake reviews were spotted, there was no organised data to present to Amazon for escalation.

- Hidden or Missing Reviews: Some reviews were visible only in certain regions or on certain devices. Others disappeared after being flagged, making it hard to track patterns.

- Scalability Issues: The problem wasn’t limited to one product. Multiple listings across categories were affected, raising suspicion of a competitor’s involvement.

It was evident that the client needed a robust, data-driven approach to detect and respond to review manipulation.

To Sum Up

This project highlights the power of structured review analysis. Fake reviews are more than a nuisance: they damage brand credibility and customer trust. By combining manual analysis with automation planning, we helped the client uncover manipulation, protect their listings, and prepare for future challenges.

Relu’s Promise: We don’t just solve problems! We build systems that protect your brand, scale with your growth, and earn customer trust.

Our Approach

We broke the solution into five key components:

Review Pattern Analysis

We started by analysing 1-star reviews. Many contained repeated phrases or poor grammar, or promoted adult products that had nothing to do with the client’s listings. These reviews were likely generated by bots or coordinated groups.

We looked for patterns in timing, language, and reviewer behaviour. Reviews that appeared in bursts, used similar wording, or came from accounts with no purchase history were flagged.
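The wording and purchase-history checks can be approximated as follows. This sketch swaps in word-shingle Jaccard similarity as the measure of "similar wording" and uses a hypothetical `verified_purchase` field in place of purchase history; the 0.6 threshold is an arbitrary starting point, not a tuned value from the project.

```python
import re
from itertools import combinations


def shingles(text, n=3):
    """Set of overlapping n-word sequences, lower-cased with punctuation dropped."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return set()
    return {tuple(words[i:i + n]) for i in range(max(len(words) - n + 1, 1))}


def jaccard(a, b):
    """Overlap of two shingle sets: 1.0 means identical wording, 0.0 nothing shared."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_reviews(reviews, similarity=0.6):
    """Return {review_id: [reasons]} for near-duplicate wording and unverified purchases."""
    flags = {r["id"]: [] for r in reviews}
    sets = {r["id"]: shingles(r["text"]) for r in reviews}
    # Pairwise comparison suits one listing's reviews; very large sets need MinHash/LSH.
    for a, b in combinations(reviews, 2):
        if jaccard(sets[a["id"]], sets[b["id"]]) >= similarity:
            flags[a["id"]].append(f"similar_to:{b['id']}")
            flags[b["id"]].append(f"similar_to:{a['id']}")
    for r in reviews:
        # "verified_purchase" is a hypothetical field standing in for purchase history.
        if not r.get("verified_purchase", False):
            flags[r["id"]].append("no_verified_purchase")
    return {rid: reasons for rid, reasons in flags.items() if reasons}
```

Recording a reason string per flag, rather than a bare yes/no, feeds directly into the kind of evidence-backed report an escalation needs.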

Reviewer Profiling

Next, we studied the reviewer accounts. Some exhibited bot-like activity, posting multiple reviews in a short time across unrelated categories. Others had conflicting review histories, praising one product while criticising a similar one from the client.

We also found profiles linked to competitor products. These reviewers often left negative feedback on the client’s listings and positive reviews on rival items. We flagged these accounts and documented their activity.
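A reviewer-level profile might encode the two behaviours described above as simple rules. The thresholds (five reviews within 24 hours spanning at least three categories) and the review dictionary keys are hypothetical, chosen only to make the idea concrete.

```python
from datetime import timedelta


def profile_reviewer(reviews, client_brand, window=timedelta(hours=24),
                     burst_size=5, min_categories=3):
    """Return rule-based flags for one reviewer's history.

    Each review is a dict with hypothetical keys: 'posted_at' (datetime),
    'category', 'brand', and 'stars' (1-5).
    """
    flags = []
    ordered = sorted(reviews, key=lambda r: r["posted_at"])
    # Bot-like burst: `burst_size` consecutive reviews inside `window`, across categories.
    for i in range(len(ordered) - burst_size + 1):
        chunk = ordered[i:i + burst_size]
        span = chunk[-1]["posted_at"] - chunk[0]["posted_at"]
        if span <= window and len({r["category"] for r in chunk}) >= min_categories:
            flags.append("burst_across_categories")
            break
    # Competitor-linked: low stars for the client, high stars for rivals in the same category.
    panned = {r["category"] for r in reviews if r["brand"] == client_brand and r["stars"] <= 2}
    praised = {r["category"] for r in reviews if r["brand"] != client_brand and r["stars"] >= 4}
    if panned & praised:
        flags.append("competitor_linked")
    return flags
```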

Impact Mapping

We tracked how fake reviews affected the client’s listings. Products with high volumes of suspicious reviews suffered reduced visibility and lower conversion rates, and some even lost Amazon badges like “Best Seller.”

We mapped these impacts across categories to understand how manipulation spread. This helped us identify which products were most vulnerable and which competitors were likely involved.

Structured Reporting

To support escalation, we built a detailed dataset. It included:

- Reviewer metadata (account age, review history, purchase status)

- Behavioural flags (repetition, timing, sentiment anomalies)

- Product segmentation (affected listings, categories, and review clusters)

- Hyperlinked references to each flagged review and profile

We created a clear and organised report that Amazon’s support team could easily review. It helped the client raise complaints and request the removal of fake reviews.

Automation Exploration

Finally, we proposed an AI-based detection framework. This system would:

- Analyse keywords and sentiment in reviews

- Detect overlaps in reviewer behaviour across listings

- Identify hidden or region-specific reviews

- Send real-time alerts if suspicious patterns appear

This blueprint laid the foundation for future automation, making review monitoring faster and more reliable.
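The proposed real-time alerting could start as a rolling-window counter per listing, sketched below. The class name, the six-hour default window, and the threshold are assumptions, and the sketch assumes reviews arrive in chronological order.

```python
from collections import deque
from datetime import timedelta


class OneStarSpikeMonitor:
    """Alert when a listing receives too many low-star reviews within a rolling window."""

    def __init__(self, threshold=5, window=timedelta(hours=6), max_stars=1):
        self.threshold = threshold
        self.window = window
        self.max_stars = max_stars
        self._recent = {}  # listing_id -> deque of low-star review timestamps

    def observe(self, listing_id, stars, posted_at):
        """Record one review; return an alert message, or None if nothing unusual."""
        if stars > self.max_stars:
            return None
        q = self._recent.setdefault(listing_id, deque())
        q.append(posted_at)
        # Drop timestamps that have slid out of the window (input assumed time-ordered).
        while posted_at - q[0] > self.window:
            q.popleft()
        if len(q) >= self.threshold:
            return (f"ALERT {listing_id}: {len(q)} reviews of <= {self.max_stars} "
                    f"star(s) within {self.window}")
        return None
```

A counter like this is cheap enough to run on every incoming review; the heavier similarity and profiling checks can then run only on listings that trigger it.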

What Made Our Solution Different

Our approach stood out for four key reasons:

The Results We Achieved

The impact was clear and measurable:

- The client could flag and report fake reviews with confidence.

- Amazon accepted the escalation and began investigating the flagged profiles

- Product credibility improved, and buyer trust returned

- Revenue loss from manipulated listings was reduced

- The client gained insights into competitor tactics and targeting patterns

- Review reliability scores helped guide catalogue decisions and future product launches

These results proved that structured analysis and smart reporting could transform a complex problem into a manageable process.

Key Benefits & Impact

- Detects fake reviews and suspicious reviewer behaviour.

- Spots harmful content patterns across product listings.

- Helps sellers report fake reviews to Amazon with solid evidence.

- Builds a system to monitor future review attacks.

- Protects brand reputation from coordinated review manipulation.

- Reduces revenue loss caused by misleading reviews.

- Improves buyer trust by removing harmful content early.

- Reveals competitor tactics and targeting strategies.

- Supports smarter catalogue decisions using review reliability scores.

- Prepares for AI tools that detect fake reviews in real time.

Use Cases Across Industries & Domains

This solution works beyond Amazon sellers. It helps many types of businesses:

E-commerce Brands

- Protect product listings from fake reviews.

- Improve customer trust and sales performance.

Hospitality & Travel

- Detect fake hotel or tour reviews.

- Maintain reputation across booking platforms.

App Developers

- Spot fake ratings and reviews on app stores.

- Prevent manipulation of app rankings.

Healthcare Providers

- Monitor patient feedback for authenticity.

- Avoid reputational damage from false reviews.

Online Education Platforms

- Ensure course reviews are genuine.

- Build trust with students and educators.

SaaS & B2B Services

- Track client feedback across review sites.

- Use insights to improve service quality and retention.

Business Owners

- Understand how competitors use fake reviews.

- Make informed decisions using review data.

Final Delivery & Next Steps

We delivered two key assets:

- A Google Sheet with reviewer profiles, flagged reviews, and behavioural insights

- A PDF report summarising findings, patterns, and escalation-ready documentation

The client is now equipped to take action, either by escalating to Amazon or making internal decisions about product listings.

Automating Lead Follow-Ups with Zapier Email Parsing

Introduction

An automated system was built to improve how incoming property inquiry emails were handled. Using Python scripting and email automation tools, the solution extended the Zapier email parser to accurately pull lead information such as name, phone number, and building name. It then sent custom follow-up messages while avoiding duplicate communication. This approach helped the client connect with potential customers faster, with better accuracy and a more personal touch.

Client Background

The client manages property inquiries for real estate listings. Each incoming inquiry had to be responded to in a timely, relevant, and personalized manner. Before this project, lead data was manually extracted and sorted, a process prone to delays, errors, and duplicate follow-ups.

To scale their outreach while maintaining a personal touch, the client needed a way to automate lead follow-up emails using Zapier while minimizing manual intervention.

Challenges and Objectives with the Project

The following are some challenges that we ran into during execution:

Challenges

- The default Zapier email parser struggled to extract data consistently from semi-structured inquiry emails.

- Without a validation layer, follow-up emails risked being sent multiple times to the same lead.

- The process had to maintain personalization while scaling automated responses.

Objectives

- Improve parsing logic to extract leads from emails with Zapier Email Parser

- Validate each new lead against a live database before sending communication

- Use Zapier lead automation to deliver booking links and tailored messages without delays

Conclusion

Relu Consultancy delivered a reliable, scalable solution for Zapier lead automation that improved how the client responded to property inquiries. The system combined email parsing, lead validation, and tailored communication to offer fast, professional responses without human oversight.

By using email automation tools and enhancing the Zapier email parser, the client was able to reduce turnaround times, prevent errors, and improve lead conversion outcomes. The project stands as a model for how to automate lead follow-up emails using Zapier in a structured, error-free, and scalable manner.

Our Tailored Approach & Implementation

Here’s how we structured our approach:

Email Parsing & Extraction

The first step involved identifying emails with the subject line “New Interest.” Relu Consultancy developed a Python script that extended the Zapier email parser's functionality by applying pattern recognition to extract:

- Lead name

- Phone number

- Email address

- Property or building of interest

This combination of email automation tools and custom scripting offered more control over how the lead details were captured. It also provided a clear example of how to use Zapier Email Parser for lead management in real-world applications.
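The pattern-recognition step might look like the following. The field labels (`Name:`, `Phone Number:`, `Building Name:`) are assumptions about the email layout, since the actual "New Interest" template is not shown. The email address is matched anywhere in the body, and the phone number falls back to an unlabelled match when no phone label is found.

```python
import re

# Hypothetical field labels; the real "New Interest" emails may word them differently.
LABELLED = {
    "name": re.compile(r"^[ \t]*(?:full )?name[ \t]*:[ \t]*(.+)$", re.I | re.M),
    "phone": re.compile(r"^[ \t]*(?:phone|mobile|tel)[^:\n]*:[ \t]*(.+)$", re.I | re.M),
    "building": re.compile(r"^[ \t]*(?:building|property)(?: name)?[ \t]*:[ \t]*(.+)$", re.I | re.M),
}
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d ().-]{7,}\d")  # fallback when no phone label exists


def parse_inquiry(subject, body):
    """Extract lead fields from a 'New Interest' email; returns None for other subjects."""
    if "new interest" not in subject.lower():
        return None
    lead = {}
    for field, pattern in LABELLED.items():
        match = pattern.search(body)
        lead[field] = match.group(1).strip() if match else None
    if lead["phone"] is None:
        match = PHONE_RE.search(body)
        lead["phone"] = match.group(0) if match else None
    if lead["phone"]:
        lead["phone"] = re.sub(r"[^\d+]", "", lead["phone"])  # keep digits and a leading +
    match = EMAIL_RE.search(body)
    lead["email"] = match.group(0).lower() if match else None
    return lead
```

Returning `None` for each field that is not found, rather than raising, lets the validation step decide whether a lead is complete enough to act on.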

Filtering & Validation

Once lead details were extracted, the system checked whether a valid email address was present. If missing, the process was halted to avoid incomplete follow-ups. When an email was found, it was cross-checked with the client’s lead database.

This ensured that follow-up emails were only sent to new leads, preventing duplicate outreach and protecting the client’s credibility.
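The validation gate can be sketched with an SQLite table standing in for the client's lead database, whose real schema is not described. One design choice here, not necessarily the project's: the duplicate check and the insert happen in a single `INSERT OR IGNORE` against the primary key, so checking for and recording a new lead is one step and two overlapping runs cannot both treat the same address as new.

```python
import sqlite3


def open_lead_db(path=":memory:"):
    """Open (or create) a stand-in lead table keyed by lower-cased email."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS leads ("
        " email TEXT PRIMARY KEY, name TEXT, phone TEXT, building TEXT,"
        " created_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def register_if_new(conn, lead):
    """Return True only for a lead whose email has not been seen before."""
    email = (lead.get("email") or "").strip().lower()
    if not email:
        return False  # no address: halt rather than send an incomplete follow-up
    with conn:  # commits the insert on success
        cursor = conn.execute(
            "INSERT OR IGNORE INTO leads (email, name, phone, building) VALUES (?, ?, ?, ?)",
            (email, lead.get("name"), lead.get("phone"), lead.get("building")),
        )
    return cursor.rowcount == 1  # 0 when the primary key already existed
```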

Automated Email Sending

After validation, the system triggered a personalized follow-up email containing a property tour booking link. The message used the extracted details to address the lead by name and reference the property of interest, keeping the tone relevant and human. All new lead data was logged into the database to support future validation, analytics, or CRM usage. This process showed how to automate lead follow-up emails using Zapier while preserving a professional tone.
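The personalized message itself could be assembled with Python's standard `email` package, as below. The booking URL, sender address, and template wording are placeholders; in the described system the sending step ran through Zapier, so this is an equivalent sketch rather than the deployed code.

```python
from email.message import EmailMessage
from urllib.parse import urlencode

BOOKING_URL = "https://booking.example.com/tour"  # hypothetical booking link

TEMPLATE = (
    "Hi {first_name},\n\n"
    "Thanks for your interest in {building}.\n"
    "You can pick a time for a property tour here:\n"
    "{link}\n\n"
    "Looking forward to showing you around.\n"
)


def build_follow_up(lead, sender="leasing@agency.example"):
    """Build a personalized follow-up message from a parsed, validated lead."""
    parts = (lead.get("name") or "").split()
    first_name = parts[0] if parts else "there"
    building = lead.get("building") or "our properties"
    # Pre-fill the (hypothetical) booking form with the property of interest.
    link = f"{BOOKING_URL}?{urlencode({'building': building})}"
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = lead["email"]
    msg["Subject"] = f"Your tour of {building}"
    msg.set_content(TEMPLATE.format(first_name=first_name, building=building, link=link))
    return msg
```

Sending is then a matter of passing the message to an SMTP client, for example `smtplib.SMTP(host).send_message(msg)`.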

Results & Outcomes

Upon implementation, we observed the following key results:

- Lead data was accurately extracted using enhanced logic layered over the Zapier email parser.

- Real-time follow-up emails were delivered without delay or duplication.

- The manual effort was drastically reduced, freeing up internal resources.

- The client improved their response time and credibility with prospective buyers.

- The project demonstrated the effective use of Zapier lead automation to manage high volumes of inquiries.

Benefits:

Implementation of this automated lead follow-up system led to several measurable and strategic benefits:

Faster Lead Response: By automating parsing and follow-up, the client could respond to inquiries instantly. This significantly improved the chances of engaging qualified leads.

Reduced Manual Effort: The team no longer needed to spend hours extracting and validating lead data, freeing them for higher-value tasks such as client relationship building.

Improved Accuracy: Errors while extracting contact details were minimised with enhanced parsing logic. It ensured messages reached the right people with the right context.

Elimination of Duplicate Outreach: The validation layer made sure that each lead received only one relevant follow-up. It helped to protect brand reputation and avoid customer frustration.

Personalization at Scale: The system maintained a human tone in all communications, helping build trust and rapport without manual intervention.

Scalability: The automated process can handle growing inquiry volumes without affecting speed or quality, which supports the client’s long-term growth.

Use Cases Across Industries:

This project was designed for a real estate business. However, the same approach can be adapted to multiple sectors:

E-Commerce: Automatically extract customer inquiries from order-related emails to send personalized product recommendations or status updates.

Travel and Hospitality: Parse booking inquiries from email to send instant confirmations, itineraries, or upsell offers for tours and activities.

Education: Process student admission or course inquiries from email to send tailored program details with application links.

Healthcare: Extract patient queries from email and send appointment booking links or relevant resources.

Professional Services: Capture potential client inquiries to automate responses with service details, booking links, or case studies.

Event Management: Parse event registration emails to send immediate confirmations, schedules, and ticket details.

Key Takeaways

The following were our core learnings and takeaways from the undertaking:

- Using email automation tools alongside custom Python scripting extends the value of the Zapier email parser.

- Real-time validation protects against redundant outreach and maintains a consistent lead experience.

- This project is a clear example of how to use Zapier Email Parser for lead management in real estate or similar industries.

- By combining automation with personalization, it’s possible to extract leads from emails with Zapier Email Parser and act on them intelligently.

No-Code Web Scraping & Automation for Crypto Insights: A 2025 Toolkit

Overview

The cryptocurrency market is notoriously volatile, and keeping ahead of the trends is essential. But manually gathering data from multiple sources like exchanges, social media, and forums can be a major bottleneck.

In 2025, there’s a smarter way to stay informed. With no-code crypto data scraping and automation tools, you can simplify the data collection process and get real-time insights, all without writing a single line of code.

This solution is ideal for crypto and finance enthusiasts who want to streamline data collection and make quicker, more informed decisions in the ever-changing crypto market.

Manual Data Collection is Holding You Back

If you're still copying data from sites like CoinMarketCap, Twitter feeds, or crypto forums into spreadsheets, you're wasting precious time. The challenges with this approach are clear:

- Dispersed Data Sources: Market data is scattered across multiple platforms, making it hard to track trends in one place.

- No Easy Way to Filter or Analyze: Without automation, extracting meaningful patterns from raw data becomes a tedious task.

- Time-Consuming Workflows: Manual data entry slows you down and increases the likelihood of errors.

Instead of getting bogged down by manual tasks, consider using crypto data automation tools and setting up an automated solution to pull the data you need, when you need it.

Make Smarter Crypto Moves

No-code crypto data scraping tools are transforming the way we track, understand, and act on crypto market trends. By integrating crypto data visualization tools, you can track market trends and identify key patterns in real time, helping you make more informed trading decisions. Whether you're a trader, analyst, or content creator, a no-code toolkit helps you keep pace with the market.

Looking to automate your crypto data collection process? Relu Consultancy can create tailored solutions to help streamline your data gathering, so you can focus on making smarter, data-driven decisions.

Contact us today to get started.

Build a Centralized, No-Code Crypto Dashboard

By setting up a no-code crypto data scraping dashboard, you can pull data from multiple platforms and visualize trends effortlessly. Here's what a no-code setup allows you to do:

- Automate Cryptocurrency Insights: Gather data automatically from various crypto exchanges, social media platforms, and news sites.

- Create Custom Dashboards: Use tools like Google Sheets, Notion, or Airtable to visualize the data, filter trends, and spot changes in market sentiment or prices.

- Make Quick Decisions: With up-to-date insights and real-time crypto data extraction, you can act faster and with more confidence, whether you're trading, analyzing, or forecasting.

Setting Up Your No-Code Crypto Data Toolkit

Building a no-code crypto data toolkit is easier than you think. Here’s how you can set up your system:

Choose Your Tools

You don’t need to be a developer to start. Look for crypto data automation tools that support the following:

- Web Scraping: Choose web scraping tools that can collect data from multiple platforms (e.g., code-based options like Python and Scrapy, or more accessible platforms like Octoparse).

- Automation: Platforms like Zapier or Make (formerly Integromat) allow you to automate workflows without any coding.

- Dashboards: Tools like Google Sheets + Looker Studio (formerly Google Data Studio), Notion, or Airtable work well as a centralized hub for your crypto data.

Set Up Web Scraping

Now it’s time to set up web scraping for crypto analytics. You can pull price data, social media mentions, sentiment data, or news updates. Set the scraping frequency based on your needs: daily for general trends, hourly for near-real-time data. Many crypto market data scraping tools can run on a schedule, so the data stays up to date.

Automate Data Flows

Once the data is collected, the next step is automation. Use platforms like Zapier or Make to:

- Send scraped data directly into your chosen dashboard.

- Set up notifications or alerts based on price changes, news mentions, or social media sentiment shifts.

- Automatically tag or categorize new data to keep everything organized.

Visualise Your Insights

Now that your data is flowing, integrate it into a no-code crypto dashboard tool. Focus on key metrics such as:

- Price trends

- Trading volume spikes

- Market sentiment scores

You can make use of filters to focus on specific coins, exchanges, or news sources, and set up custom reports to spot patterns easily.

What You Can Achieve

Here’s what a no-code crypto data toolkit helps you achieve:

- Eliminate Manual Data Entry: No more copying and pasting data from multiple sources into spreadsheets.

- Get Real-Time Updates: Stay on top of market movements and trends without delays.

- Save Time: Automate repetitive tasks to free up time for analysis and strategy.

- Make Smarter Decisions: With all your data in one place, you can make more informed decisions faster using automated crypto analytics platforms that provide real-time insights and trend analysis.

Tips for Success

To get the most out of your no-code crypto data toolkit, keep these tips in mind:

- Start Small: Focus on a few key data sources to avoid getting overwhelmed.

- Validate Data Regularly: Even with automation, it’s essential to ensure your data is accurate and reliable.

- Use Filters and Alerts: Set up automated filters to avoid information overload and get only the insights you need.

- Scale as You Grow: Once you’re comfortable, you can add more integrations and data sources to your toolkit.

Automated Image Pipeline for Training AI in Crop Health Diagnostics

Overview

This project focused on developing an end-to-end automated image processing pipeline to support AI model training in agriculture. The system was designed to collect and validate high-resolution images of crop diseases, nutrient deficiencies, pests, and fungi.

By automating data acquisition and applying filtering aligned with research practices, the client assembled a structured, consistent crop disease dataset for use in machine learning agriculture models aimed at plant health diagnostics.

Client Background

The client operates in the agritech AI sector and specializes in developing tools for AI crop diagnostics. They required a scalable and repeatable method to build a high-quality agricultural image dataset for machine learning.

Previously, image collection was handled manually, which introduced inconsistencies and slowed down dataset development. The new system needed to support image curation at scale while adhering to standards common in academic and applied AI contexts.

Challenges & Objectives

Challenges

- Manual sourcing of agricultural images was inefficient, inconsistent, and unscalable

- Ensuring image quality and relevance without automated validation was time-intensive

- No consistent framework existed for data labeling, categorization, or audit trails

- Preparing a dataset that meets the quality standards expected in supervised learning workflows

Objectives

- Build an image scraping tool for agricultural AI training datasets

- Organize images into standardized categories (deficiencies, pests, diseases, fungi)

- Implement automated validation and deduplication using a research-aligned image filtering tool

- Provide metadata tracking and transparency through structured logging

- Enable scalable, continuous data collection via server-based deployment

Conclusion

Relu Consultancy delivered a scalable, research-informed solution that transformed fragmented image sourcing into a reliable and automated process. The final crop disease dataset enabled the client to accelerate AI model development with cleaner, labeled data that met training standards. By integrating scraping, relevance filtering, audit logging, and storage into a seamless workflow, the system laid a strong foundation for future work in AI crop diagnostics and machine learning agriculture.

Approach & Implementation

The following are the details of the approach that was taken:

Search Query Construction

The system began by defining detailed search queries mapped to common crop issues. These included terms like “rice leaf potassium deficiency” or “soybean rust symptoms.” Filters for image resolution (minimum 480p), location, and recent timeframes were applied to reflect the freshness and quality standards typical of AI training datasets.
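The query step can be sketched as a cross product of crops and issue terms, grouped by the four dataset categories. This is a minimal illustration, not the project's code: the `ISSUES` and `CROPS` vocabularies, `build_queries`, and the filter keys (`min_height_px`, `region`, `max_age_days`) are hypothetical, and only the 480p minimum comes from the text.

```python
from itertools import product

# Hypothetical vocabulary, grouped by the dataset's four categories.
ISSUES = {
    "deficiencies": ["potassium deficiency", "nitrogen deficiency"],
    "pests": ["aphid damage"],
    "diseases": ["rust symptoms"],
    "fungi": ["powdery mildew"],
}
CROPS = ["rice leaf", "soybean"]

# Hypothetical filter keys; only the 480p minimum is taken from the case study.
DEFAULT_FILTERS = {"min_height_px": 480, "region": "any", "max_age_days": 365}


def build_queries(crops, issues, filters=DEFAULT_FILTERS):
    """Return one query spec per (crop, issue) pair, tagged with its category."""
    queries = []
    for category, terms in issues.items():
        for crop, term in product(crops, terms):
            queries.append({"q": f"{crop} {term}", "category": category, **filters})
    return queries
```

Each spec can then be handed to the scraping module, and the `category` tag travels with every downloaded image so it lands in the right folder.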

SERP Scraping Module

Selenium and search engine APIs (Google, Bing, Yahoo) were used to retrieve image URLs, page sources, and metadata. A retry mechanism handled rate limits to ensure uninterrupted extraction. This module served as the core of the image scraping tool for agricultural AI training datasets and supported robust, high-volume collection.
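The text does not describe the retry logic in detail; a common approach, assumed here, is exponential backoff with jitter. `RateLimitError` and `with_retries` are hypothetical names, and `fetch` stands for whatever Selenium or API call retrieves a results page.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error a fetcher raises when a search engine signals rate limiting."""


def with_retries(fetch, *args, max_attempts=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fetch(*args), retrying on RateLimitError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(*args)
        except RateLimitError:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            # Double the wait each attempt (capped), plus jitter so parallel workers desynchronise.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay / 2))
```

Injecting `sleep` keeps the helper testable and lets a scheduler substitute its own waiting strategy.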

Image Download and Storage

Image files were downloaded using blob and base64 methods, then stored in cloud repositories like Google Drive and AWS S3. A hierarchical folder structure categorized the images into deficiencies, pests, diseases, and fungi. This structure was built to support downstream tasks such as annotation, model validation, and class balancing, which are critical steps in ensuring model generalizability.
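For images embedded as base64 data URIs, the download step reduces to decoding the payload and writing it into the category hierarchy. The sketch below writes to a local directory as a stand-in for Google Drive or AWS S3; the crop subfolder, the content-hash filenames, and the function names are assumptions for illustration.

```python
import base64
import hashlib
from pathlib import Path

CATEGORIES = {"deficiencies", "pests", "diseases", "fungi"}
EXTENSIONS = {"image/jpeg": ".jpg", "image/png": ".png", "image/webp": ".webp"}


def decode_data_uri(uri):
    """Split a 'data:<mime>;base64,<payload>' URI into (mime, raw bytes)."""
    header, _, payload = uri.partition(",")
    if not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("not a base64 data URI")
    mime = header[len("data:"):-len(";base64")]
    return mime, base64.b64decode(payload)


def save_image(root, category, crop, data_uri):
    """Write the image to root/category/crop/ (hypothetical layout) named by content hash."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category}")
    mime, raw = decode_data_uri(data_uri)
    name = hashlib.sha256(raw).hexdigest()[:16] + EXTENSIONS.get(mime, ".bin")
    target = Path(root) / category / crop / name
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(raw)
    return target
```

Content-hash filenames make re-runs idempotent: the same bytes always map to the same path.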

Quality and Relevance Filtering

An AI-based content validation layer, supported by perceptual hashing (phash), was used to detect and eliminate duplicates. Content relevance was assessed using predefined visual cues. Only images meeting clarity and context standards were retained. Filtered-out samples were logged for audit, promoting dataset transparency and adherence to data sanitization best practices. These steps helped preserve the consistency and usability of the plant health AI training data.
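A DCT-based perceptual hash, in the style of common pHash implementations, can be sketched in pure Python. It assumes each image has already been converted to grayscale and resized to 32x32 (for example with Pillow); `phash`, `deduplicate`, and the 5-bit distance threshold are illustrative choices, not the project's settings. Dropped images are returned with the id of the image they duplicate, the kind of record an audit log would keep.

```python
import math
from statistics import median


def _dct_1d(values, keep):
    """First `keep` DCT-II coefficients of a sequence (unnormalised; scale is irrelevant here)."""
    n = len(values)
    return [
        sum(v * math.cos(math.pi * k * (2 * i + 1) / (2 * n)) for i, v in enumerate(values))
        for k in range(keep)
    ]


def phash(pixels, hash_size=8):
    """64-bit DCT perceptual hash of a square grayscale image given as rows (e.g. 32x32)."""
    # Separable 2-D DCT: rows first, then columns, keeping only the low-frequency corner.
    rows = [_dct_1d(row, hash_size) for row in pixels]
    low = [_dct_1d(col, hash_size) for col in zip(*rows)]
    coeffs = [c for col in low for c in col]
    threshold = median(coeffs)
    bits = 0
    for c in coeffs:
        bits = (bits << 1) | int(c > threshold)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def deduplicate(hashes, max_distance=5):
    """Return (kept_ids, dropped) where dropped holds (duplicate_id, original_id) pairs."""
    kept, dropped = [], []
    for image_id, h in hashes.items():
        original = next((k for k, kh in kept if hamming(h, kh) <= max_distance), None)
        if original is None:
            kept.append((image_id, h))
        else:
            dropped.append((image_id, original))  # candidate row for the audit log
    return [k for k, _ in kept], dropped
```

Because the hash keeps only low-frequency structure, a uniformly brightened copy hashes identically, while unrelated images typically differ in many of the 64 bits.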

Metadata Logging with Google Sheets

A synchronized Google Sheets interface was used to log filenames, sources, categories, and filtering status. This created a live audit trail and helped align data science workflows with agronomic review processes. The traceability also simplified quality checks, dataset updates, and collaboration.
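The logging step can be sketched as a fixed row schema whose values are shaped as the 2-D list a spreadsheet append expects. The Google Sheets API v4 `spreadsheets.values.append` method accepts rows in this shape, but the client call is omitted here; `LogRow`, the status labels, and the CSV export are hypothetical stand-ins.

```python
import csv
import io
from dataclasses import astuple, dataclass, fields


@dataclass
class LogRow:
    filename: str
    source: str
    category: str
    status: str  # hypothetical labels, e.g. "kept", "duplicate", "irrelevant"


HEADER = [f.name for f in fields(LogRow)]


def to_sheet_values(rows):
    """Header plus one list per row: the 2-D 'values' shape a sheet append takes."""
    return [HEADER] + [list(astuple(r)) for r in rows]


def to_csv(rows):
    """Local CSV copy of the same audit trail."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(to_sheet_values(rows))
    return buf.getvalue()
```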

Server Deployment and End-to-End Testing

The entire system was deployed to a cloud server, enabling scheduled runs and continuous data collection. Each pipeline component, from search query execution to dataset export, was tested to ensure reliability and alignment with training data requirements in machine learning agriculture projects.

Results & Outcomes

The following were the outcomes of the project:

- The platform enabled automated, scalable collection of thousands of high-resolution crop images

- Images were consistently categorized, validated, and prepared for use in AI development

- Manual image vetting was eliminated, saving time and improving dataset preparation speed

- Images were organized into usable classes for model training

- Substandard and redundant images were filtered out before ingestion

- The system remained operational with minimal manual intervention

- Metadata logging improved dataset management and accountability

Key Takeaways

Given the direction of the project, the following key takeaways were identified:

- A structured pipeline combining automated image processing and content validation is essential for building reproducible AI datasets

- Using perceptual hashing and relevance scoring ensured dataset quality and reduced noise

- Metadata tracking supported review, debugging, and retraining workflows

- Aligning the pipeline with academic dataset practices supported long-term research and commercial deployment goals

Deal Monitoring Platform for Online Marketplaces

Overview

This project focused on building an automated deal discovery platform for online marketplaces that helped users track listings, evaluate pricing trends, and identify promising deals. The system automated listing analysis, pricing checks, and real-time alerts, giving users faster access to undervalued items while reducing the manual effort involved in tracking and verifying marketplace listings.

Client Background

The client operates in the e-commerce and resale intelligence space. Their operations depend on timely access to online listings and the ability to identify profitable deals based on historical pricing. Their business model involves reselling items sourced from various online platforms, where spotting underpriced listings early can directly impact margins.

Previously, this process relied heavily on manual tracking and limited third-party tools, which led to inconsistent data capture, slower decision-making, and missed opportunities. They needed a solution that could automate data collection, adapt to different platforms, deliver actionable insights, and support long-term growth.

Challenges & Objectives

The objectives and identified challenges of the project were as follows:

Challenges

- Many platforms require session handling, login credentials, and browser simulation to access listing data.

- The volume of data scraped across platforms was too large for manual processing or flat-file storage.

- Identifying underpriced listings required logic based on historical data rather than arbitrary thresholds.

- Alerts and insights needed to be easily accessible through a visual, filterable dashboard.

Objectives

- Build a web scraping tool for e-commerce price tracking across authenticated and public platforms.

- Store listing data, images, and metadata in a long-term, structured format using PostgreSQL.

- Develop logic to flag deals using basic price analytics and machine learning for detecting underpriced marketplace listings.

- Integrate real-time deal alerts for resale and flipping platforms via Telegram.

- Deliver a dashboard for monitoring item listings and price trends.

Conclusion

Relu Consultancy developed a system that helped the client automate their listing analysis and improve the accuracy and speed of deal detection across online marketplaces. With a combination of scraping logic, database structuring, alert systems, and frontend visualization, the automated deal discovery platform for online marketplaces turned raw data into actionable insight. It allowed the client to respond faster, track trends more consistently, and reduce manual oversight. The project demonstrated how well-planned platform monitoring tools can support resale workflows and scale alongside marketplace changes.

Approach & Implementation

The approach and its implementation are detailed below:

Web Scraping and Automation

To support both public and login-protected marketplaces, the system was built using Playwright and Puppeteer. It simulated human browsing behaviour, handled logins, and used proxy rotation through tools like Multilogin. These measures reduced the risk of detection while maintaining long-term scraping stability. Custom scraping routines were written per platform, allowing for flexible selectors and routine maintenance.
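The per-platform, fallback-selector design can be sketched independently of any browser library. `PLATFORMS`, its selectors, `extract`, and `ProxyPool` are hypothetical; `query` stands for a thin wrapper around a Playwright or Puppeteer element lookup, and a proxy from the pool could be supplied when creating a new browser context. Multilogin's own profile and proxy management is not modelled.

```python
from itertools import cycle

# Hypothetical per-platform config: ordered fallback selectors for each field.
PLATFORMS = {
    "example-market": {
        "title": ["h1[data-testid='title']", "h1.listing-title", "h1"],
        "price": ["[data-testid='price']", "span.price"],
    },
}


def extract(query, platform, field):
    """Return the first non-empty match for `field`, trying selectors in order.

    `query(selector)` is any callable returning text or None.
    """
    for selector in PLATFORMS[platform][field]:
        value = query(selector)
        if value:
            return value.strip()
    return None


class ProxyPool:
    """Round-robin rotation: each new browser session takes the next proxy."""

    def __init__(self, proxies):
        self._it = cycle(proxies)

    def next_proxy(self):
        return next(self._it)
```

When a site renames a class, only that platform's selector list needs a new entry; the extraction code stays unchanged.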

Data Storage and Processing

Listing information was stored in a PostgreSQL database with proper indexing for fast retrieval. Metadata, images, timestamps, and item descriptions were organised for long-term access and trend analysis. This backend design allowed the platform to serve as a scalable online monitoring platform for pricing intelligence.
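A minimal schema sketch follows. It runs on Python's built-in `sqlite3` so it is self-contained, with comments noting the PostgreSQL types one would likely use instead; the table layout, column names, and index are assumptions, not the client's schema. With PostgreSQL drivers such as psycopg, placeholders are written `%s` rather than `?`.

```python
import sqlite3

# Hypothetical schema; one row per listing snapshot so prices can be tracked over time.
SCHEMA = """
CREATE TABLE IF NOT EXISTS listings (
    id          INTEGER PRIMARY KEY,      -- BIGSERIAL in PostgreSQL
    platform    TEXT NOT NULL,
    external_id TEXT NOT NULL,
    item_type   TEXT,
    title       TEXT,
    description TEXT,
    price       REAL NOT NULL,            -- NUMERIC(12, 2) in PostgreSQL
    image_url   TEXT,
    scraped_at  TEXT NOT NULL,            -- TIMESTAMPTZ in PostgreSQL
    UNIQUE (platform, external_id, scraped_at)
);
-- Supports price-history lookups by item type in time order.
CREATE INDEX IF NOT EXISTS idx_listings_type_time ON listings (item_type, scraped_at);
"""


def record_listing(conn, platform, external_id, item_type, title, price, scraped_at):
    """Insert one snapshot; repeating a snapshot violates the UNIQUE constraint."""
    conn.execute(
        "INSERT INTO listings (platform, external_id, item_type, title, price, scraped_at)"
        " VALUES (?, ?, ?, ?, ?, ?)",
        (platform, external_id, item_type, title, price, scraped_at),
    )


def price_history(conn, item_type):
    """Prices for one item type, oldest first (ISO timestamps sort correctly as text)."""
    cur = conn.execute(
        "SELECT price FROM listings WHERE item_type = ? ORDER BY scraped_at",
        (item_type,),
    )
    return [row[0] for row in cur]
```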

Deal Detection and Analysis

Using Python libraries like Pandas and NumPy, the team created logic to track historical pricing trends and detect anomalies. Listings that significantly deviated from baseline values were flagged. A lightweight machine learning model built with scikit-learn was added to improve deal prediction accuracy over time, helping refine what was considered a "good deal."
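The baseline-deviation rule can be illustrated with the standard library alone (the project itself used Pandas and NumPy, plus a scikit-learn model not shown here). This sketch uses the median of an item's past prices as a robust baseline and flags listings at least 25% below it; the threshold, the five-sale minimum history, and `flag_deals` are hypothetical.

```python
from statistics import median


def flag_deals(history, listings, discount=0.25, min_history=5):
    """Flag listings priced at least `discount` below the median of past prices.

    history: item key -> list of past prices (hypothetical shape)
    listings: dicts with at least "item" and "price"
    """
    flagged = []
    for listing in listings:
        past = history.get(listing["item"], [])
        if len(past) < min_history:
            continue  # too little history for a reliable baseline
        baseline = median(past)  # robust to a few extreme past prices
        if listing["price"] <= baseline * (1 - discount):
            pct = round(100 * (1 - listing["price"] / baseline), 1)
            flagged.append({**listing, "baseline": baseline, "discount_pct": pct})
    return flagged
```

With a history of 450, 470, 460, 480, and 455 (median 460), a listing at 300 is flagged at a 34.8% discount, while one at 440 is not.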

Alerts and Integrations

A Telegram bot was developed to send real-time deal alerts. Alerts could be filtered by item type, location, price range, or custom parameters. This helped the client reduce the lag between listing appearance and response time.
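Filtering and message construction can be sketched as below. `AlertFilter` and `build_alert` are hypothetical; the URL follows the Telegram Bot API's `sendMessage` method, which accepts `chat_id` and `text` as form-encoded parameters. The actual HTTP request, bot token, and chat ID are left to the deployment.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlencode

TELEGRAM_API = "https://api.telegram.org/bot{token}/sendMessage"


@dataclass
class AlertFilter:
    """Hypothetical per-user filter; None means 'any'."""
    item_type: Optional[str] = None
    location: Optional[str] = None
    min_price: float = 0.0
    max_price: float = float("inf")

    def matches(self, deal):
        return (
            (self.item_type is None or deal["item_type"] == self.item_type)
            and (self.location is None or deal["location"] == self.location)
            and self.min_price <= deal["price"] <= self.max_price
        )


def build_alert(token, chat_id, deal):
    """Return (url, form-encoded body) for a sendMessage POST; any HTTP client can send it."""
    text = f"Deal: {deal['title']} at {deal['price']:.2f} ({deal['location']})\n{deal['url']}"
    return TELEGRAM_API.format(token=token), urlencode({"chat_id": chat_id, "text": text})
```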

Dashboard Interface

The frontend was built using HTML, JavaScript, and minimal UI components for clarity and responsiveness. The dashboard for monitoring item listings and price trends allowed users to view historical and live data, inspect flagged deals, and analyze pricing movements across platforms. Simple charts and filters gave structure to the raw data and improved decision-making.

Results & Outcomes

The following were some notable outcomes of the project:

- The platform monitoring tools automated listing collection across several marketplaces, including complex ones like Facebook Marketplace

- Real-time alerts improved the client's ability to act quickly on profitable listings

- Historical data and live insights were centralized in one easy-to-use dashboard

- The scraping routines continued to operate even as site structures changed, thanks to the modular selector design

Key Takeaways

Here are the conclusions we can draw from the project:

- A modular marketplace monitoring system, supported by browser emulation and scraping logic, can reliably track high-volume listing data

- Combining structured databases with basic ML techniques provides a scalable way to detect pricing anomalies

- Real-time notifications reduce manual monitoring and help users act quickly on undervalued listings

- Simple, focused dashboards make large datasets easier to work with, especially in fast-paced resale environments

Betting on Precision — How Robot Automation Gave toto.bg the Winning Edge

1. Executive Summary

Online sports betting is a world of high stakes and high speed. Every second counts, so every decision needs precision. The management at toto.bg recognised this when they realised that repetitive manual betting selections weren’t just inefficient but risky as well.

This case study describes how toto.bg designed and implemented a custom-built Robot Automation solution that replaced error-prone manual workflows with a fast, fully automated system. The system integrates real-time data via an API, accepts CSV uploads of betting strategies, and executes betting logic algorithmically. It significantly reduced human error, increased operational speed, and, as a bonus, unlocked efficiency that scales.

2. Introduction

What once seemed manageable became complicated as the betting world expanded. Stricter rules put pressure on management to get everything right, and toto.bg found it increasingly hard to track fast-moving games, large volumes of data, and countless choices happening simultaneously.

Imagine sorting through all that information manually: tracking live matches, reading user strategies, checking the odds, and entering everything precisely, over and over. The work not only slowed the process down but was also stressful, tiring, and messy.

toto.bg was seeking a suitable solution to address this issue.

Enter: Robot Automation.

It coupled user-defined logic with real-time sports data, transforming arduous manual betting tasks into a sleek automated process.

3. Project Overview

Project Name: Robot Automation

Objective: Automate betting selections to optimise speed, precision, and scalability

Key Features:

- Real-Time API Integration: Pulls live match data from toto.bg

- CSV-Based User Interface: Allows users to upload their betting strategies with drag-and-drop ease

- Automated Decision Engine: Executes betting choices with zero manual input
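The interplay of the CSV interface and the decision engine can be sketched as follows. The CSV columns, the odds format, and `load_strategy`/`decide` are hypothetical, since the case study does not describe toto.bg's data layout or API; the rule shown places a selection only when its live odds meet the user's minimum.

```python
import csv
import io

# Hypothetical layout of an uploaded strategy: one row per intended selection.
SAMPLE = """match_id,market,selection,min_odds,stake
101,1X2,1,1.80,10
102,1X2,X,3.20,5
"""


def load_strategy(text):
    """Parse strategy CSV text, converting numeric columns."""
    rows = csv.DictReader(io.StringIO(text))
    return [{**r, "min_odds": float(r["min_odds"]), "stake": float(r["stake"])} for r in rows]


def decide(strategy, live_odds):
    """Return the bets whose live odds meet each rule's minimum.

    live_odds maps (match_id, market, selection) -> current decimal odds, as it
    might be parsed from the real-time feed (the real format is an assumption).
    """
    bets = []
    for rule in strategy:
        odds = live_odds.get((rule["match_id"], rule["market"], rule["selection"]))
        if odds is not None and odds >= rule["min_odds"]:
            bets.append({"match_id": rule["match_id"], "selection": rule["selection"],
                         "odds": odds, "stake": rule["stake"]})
    return bets
```

Keeping the rules in plain CSV lets business users change strategy without touching code, while the engine stays a small, deterministic function that is easy to test.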

10. Conclusion & Future Outlook

Robot Automation began as a tool but evolved into a transformative technology, more than just an optimiser. It showed how intelligent workflows can deliver pinpoint accuracy, scale effortlessly, and empower business users to take control.

We are now exploring more possibilities with Robot Automation. These include:

- Predictive Betting Inputs via historical match data

- Multi-Language Interfaces for international rollout

- Cloud Deployment for real-time sync across devices.

To conclude this case study: the future of betting automation isn’t just possible; it’s programmable.

4. Workflow & Process

Step 1: Plugging into the Pulse